National and International Educational Assessments: Overview, Results, and Issues

U.S. students participate in many assessments to track their educational achievement. Perhaps the most widely discussed of these are statewide assessments required by the Elementary and Secondary Education Act (ESEA), which was most recently comprehensively amended by the Every Student Succeeds Act (ESSA; P.L. 114-95). However, U.S. students also participate in large-scale national assessments, authorized by the National Assessment of Educational Progress Authorization Act (NAEPAA; Title III, Section 303 of P.L. 107-279), and international assessments, authorized by the Education Sciences Reform Act (ESRA; Title I, Section 153(a)(6) of P.L. 107-279). At the national level, students participate in the National Assessment of Educational Progress (NAEP). At the international level, U.S. students participate in the Trends in International Mathematics and Science Study (TIMSS), Progress in International Reading Literacy Study (PIRLS), and Program for International Student Assessment (PISA).

Although there are some similarities between statewide, national, and international assessments, they differ in purpose and level of reporting. For example, the purpose of statewide assessments is primarily to inform statewide accountability systems and provide information on individual achievement. By contrast, the purpose of large-scale assessments is to highlight achievement gaps, track national progress over time, compare achievement within the United States, and compare U.S. achievement to that of other countries. Results of these assessments are not reported for individuals.

National Assessments: The NAEP is a series of assessments measuring achievement in various content areas. The long-term trends NAEP (LTT NAEP) has tracked achievement since the 1970s and has remained relatively unchanged. The main NAEP assessment has tracked achievement since the 1990s and changes periodically to reflect changes in school curricula. The main NAEP has three levels: national, state, and Trial Urban District Assessment (TUDA). States that receive Title I-A funding under the ESEA are required to participate in biennial state NAEP assessments in reading and mathematics for 4th and 8th grade. Results from the 2017 main NAEP show a small but significant increase in 8th grade reading since 2015. There were no significant changes in 4th grade reading, 4th grade mathematics, or 8th grade mathematics since 2015. Longer term, however, average reading and mathematics scores have increased significantly since the initial administrations in the 1990s.

International Assessments: The United States participates in three international assessments: TIMSS, PIRLS, and PISA. TIMSS is an assessment of mathematics and science for 4th and 8th grade students. PIRLS is an assessment of reading literacy for 4th grade students. PISA is an assessment of reading literacy, mathematics literacy, and science literacy for 15-year-old students. In general, U.S. students have made statistically significant gains since the initial administrations of international assessments; however, achievement did not consistently increase in the most recent administrations of international assessments.

Issues of Interpretation of National and International Assessments: Results of national and international assessments are difficult to interpret. One challenge is processing the large amount of data. Another is understanding the difference between statistical significance and educational significance. Reporting statistical significance is standard practice in research, but it does not convey the magnitude of a difference and its associated educational significance. Another issue is the tendency to focus narrowly on one assessment at one point in time. A narrow focus may not provide the appropriate context to interpret results accurately. International assessment results may also be affected by socioeconomic considerations within and across countries.

Comparing Results Across Assessments: Comparing results across national and international assessments can be challenging. Each assessment was created for a unique purpose by different groups of stakeholders, which makes direct comparisons difficult. There are a number of issues to consider when evaluating U.S. students’ performance across assessments. For example, consideration must be given to the differences in (1) the degree of alignment of content standards and assessments, (2) the target population being assessed, (3) the voluntary nature of student participation, (4) the participating education systems, (5) the scale of the assessment, and (6) the precision of measurement for each assessment.

Contents

- Overview

- Introduction to Large-Scale Assessments

- Purposes of Large-Scale Assessments

- Participation

- Score Reporting

- Types of Large-Scale Assessments

- National Assessments: The National Assessment of Educational Progress

- Main NAEP Program

- LTT NAEP Program

- U.S. Performance on the National Assessment of Educational Progress

- Highlights from 2017 Main NAEP

- Highlights from LTT NAEP

- Achievement Gaps Reported by Main NAEP

- Achievement Gaps Reported by LTT NAEP

- International Assessments

- TIMSS

- U.S. Performance on TIMSS in Relation to Other Countries

- U.S. Performance on TIMSS over Time by Achievement Percentiles

- PIRLS

- U.S. Performance on PIRLS and ePIRLS in Relation to Other Countries

- U.S. Performance on PIRLS over Time by Achievement Levels

- PISA

- U.S. Performance on PISA in Relation to Other Countries

- U.S. Performance on PISA Over Time

- Issues of Interpretation in Large-Scale Assessments

- The "Significance" of Assessment Results

- The Narrow Focus on One Assessment

- Socioeconomic Considerations Across Countries

- Comparing Results Across Assessments

- NAEP and Statewide Assessments Comparisons

- NAEP and International Assessments

- Why Participate?

- NAEP Participation

- International Assessment Participation

- Limitations of NAEP and International Large-scale Assessments for Policy Consideration

- Identification and Implementation of Policies to Increase Achievement on the Basis of National and International Assessments

- Impact of Achievement on Economic Prosperity

- Concluding Thoughts

Figures

- Figure 1. Average Main NAEP Performance, 1990 to 2017

- Figure 2. Average Main NAEP Performance, 1990-2017, by Percentile of Achievement

- Figure 3. LTT NAEP Average Mathematics and Reading Scores for 9-, 13-, and 17-year old Students

- Figure 4. Trends in U.S. 4th and 8th Grade TIMSS Average Mathematics Scores by Achievement Level and Year

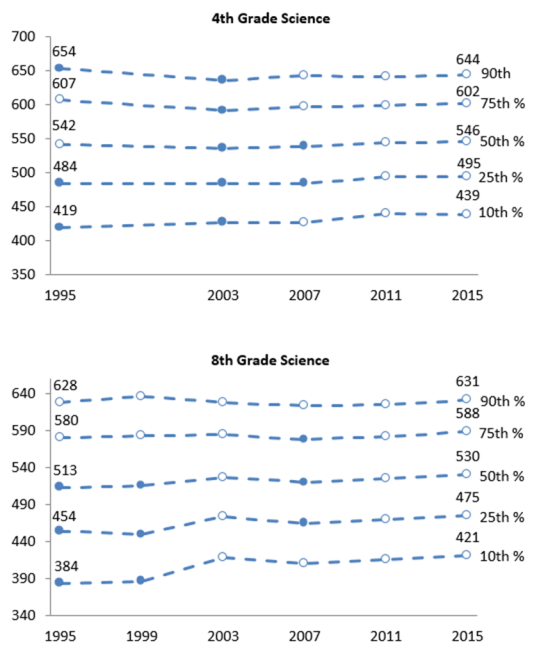

- Figure 5. Trends in U.S. 4th and 8th Grade TIMSS Average Science Scores by Achievement Level and Year

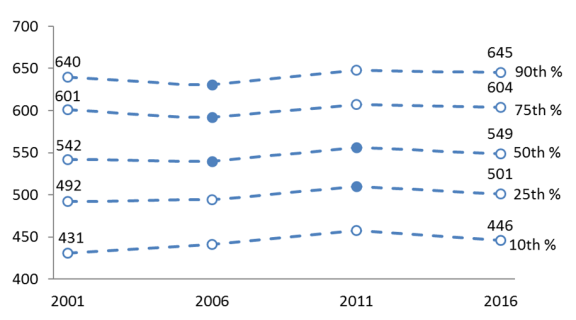

- Figure 6. Trends in U.S. 4th Grade Average PIRLS Reading Scores by Achievement Level and Year

Overview

Assessing the achievement of students in elementary and secondary schools and the nation's educational progress is fundamental to informing education policy approaches. Congressional interest in this area includes and extends beyond the annual assessments administered by states to comply with the educational accountability requirements of Title I-A of the Elementary and Secondary Education Act (ESEA). Congressional interest in testing also encompasses a national assessment program, authorized by the National Assessment of Educational Progress Authorization Act (NAEPAA; Title III, Section 303 of P.L. 107-279), and participation in international assessment programs, authorized by the Education Sciences Reform Act (ESRA; P.L. 107-279, Section 153(a)(6)). At the national level, students participate in the National Assessment of Educational Progress (NAEP). At the international level, U.S. students participate in the Trends in International Mathematics and Science Study (TIMSS), Progress in International Reading Literacy Study (PIRLS), and Program for International Student Assessment (PISA).1

When national and international assessment results are released, there is a tendency to take the results of one assessment and present them as a snapshot of U.S. student achievement. The focus on one set of assessment outcomes may result in a narrow and possibly misleading view of overall student achievement. The primary purpose of this report is to provide background and context for the interpretation of national and international assessment scores so that results can be interpreted appropriately over time and across multiple assessments. Other purposes of this report are to describe specific national and international assessments, describe the recent results of these assessments, and clarify specific issues regarding the interpretation of assessment scores that explain the achievement of U.S. students.

Introduction to Large-Scale Assessments

National and international assessments are large-scale assessments of educational progress. While some may also consider statewide assessments "large-scale," for the purposes of this report "large-scale assessments" refers only to national and international assessments. These assessments differ from statewide and other assessments in several important ways. First, large-scale assessments have different purposes than smaller-scale assessments. Second, there are different participation requirements and sampling procedures. And, third, there are differences in the ways scores are typically reported for large-scale assessments versus smaller-scale assessments. This section of the report discusses some of the major differences between large-scale and other, smaller-scale assessments, such as state and local assessments.

Purposes of Large-Scale Assessments

The primary purposes of large-scale assessments are to highlight achievement gaps, track national progress over time, compare student achievement within the United States, and compare U.S. academic performance to the performance of other countries. Unlike statewide assessments that evaluate schools and districts, large-scale assessment results generally cannot be connected to individual students, schools, or districts.2 Results are typically reported at the national or state levels.

Results from large-scale assessments that are reported at the national or state levels are well suited for broad-based analyses of achievement gaps in the United States. The "achievement gap" refers to differences in educational performance across subgroups of U.S. students. The most commonly reported achievement gaps are those that highlight differences by race, ethnicity, socioeconomic status, disability status, and gender.

Results reported at the national and state levels are also well suited to track U.S. progress over time. Statewide assessments tend to change periodically depending on several factors, including changes in state and federal legislative requirements, in the assessments administered, and in the vendors that assist states with assessment development. By contrast, national and international assessments have remained relatively stable over time. Because of this stability, results from different years can be compared more directly, which makes them easier to interpret. Some national assessment programs date back to the 1960s and allow for a broader view of educational progress than statewide assessments.

Results reported at the national level on international assessments are uniquely suited to compare U.S. academic performance to the performance of other countries. Statewide assessments and national assessments cannot be used for this purpose. The United States has participated in international assessments since the 1960s.3 Depending on the type of international assessment and year of administration, U.S. student performance has been compared to student performance in approximately 30 to 70 countries. Furthermore, some international assessments have been benchmarked against U.S. student performance in certain states.4

Participation

Participation requirements for statewide, national, and international assessments differ. States are required by the ESEA to assess all students in statewide assessment programs, including students with disabilities and English Learners (ELs).5 In assessment terminology, states are required to assess the "universe" of students (i.e., all students) in statewide assessments.

States that receive Title I-A ESEA funding (currently, all states) are also required to participate in biennial NAEP assessments of reading and mathematics for the 4th and 8th grades. In contrast to statewide assessments, however, states are required to administer national assessments to a subset of students. In assessment terminology, states assess a "representative sample" of students.6 Additionally, states may not be required to administer the assessments to certain students with disabilities and ELs if these students require an accommodation that is not permitted on the national assessments.7 Although states are required to participate in these assessments, individual participation of students remains voluntary.

Unlike with statewide assessments and the state NAEP assessments in reading and mathematics, states are not required to participate in other national assessments or in any international assessments. Participation is voluntary at both the state and student levels. If a state agrees to participate, each international assessment has a different method for selecting students. Like national assessments, international assessments test a "representative sample" of students.

Score Reporting

Score reporting for large-scale and smaller-scale assessments has some noteworthy similarities and differences. Statewide, national, and international assessments can all report student achievement as scaled scores.8 A scaled score is a standardized score expressed on a common scale that can be used to make comparisons across students, across subgroups of students, and over time on a given assessment.

Educational assessments often report scaled scores instead of raw scores or percent correct. There are several reasons that scaled scores are preferable. Large-scale assessment programs usually have multiple forms of the same test to control for student exposure to assessment items.9 As such, different students take different forms of the same test. Although the multiple forms of the same assessment are similar, there are inevitably differences in difficulty of certain items across forms. By creating a scaled score, the scores of students or groups of students can be directly compared, even when different forms of varying difficulty were administered.10
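To make the idea of a common scale concrete, the following is a minimal sketch of one simple approach: a linear transformation that places raw scores from two test forms on the same scale. It assumes that the groups taking each form are equivalent (for example, randomly assigned). The raw scores, the target mean of 250, and the target standard deviation of 50 are hypothetical, and operational assessment programs rely on more sophisticated scaling and equating methods.

```python
from statistics import mean, pstdev


def linear_scale(raw_scores, target_mean=250.0, target_sd=50.0):
    """Map one form's raw scores onto a common scale.

    Each raw score is standardized against its own form's mean and
    standard deviation, then re-expressed on the target scale.
    """
    form_mean = mean(raw_scores)
    form_sd = pstdev(raw_scores)
    return [target_mean + target_sd * (x - form_mean) / form_sd for x in raw_scores]


# Hypothetical raw scores (number of items correct) on two forms.
# Form B is harder: comparable students answer six fewer items correctly.
form_a = [30, 34, 38, 42, 46]
form_b = [24, 28, 32, 36, 40]

scaled_a = linear_scale(form_a)
scaled_b = linear_scale(form_b)

for raw_a, raw_b, s_a, s_b in zip(form_a, form_b, scaled_a, scaled_b):
    print(f"Form A raw {raw_a} -> {s_a:.1f} | Form B raw {raw_b} -> {s_b:.1f}")
```

In this illustration, a raw score of 30 on Form A and a raw score of 24 on Form B receive the same scaled score, because each sits at the same relative position on its own form.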

Although all these assessments use scaled scores, each has a different scale. For example, some scales from national assessments are from 0-300 while scales from international assessments are typically 0-1000.11 Therefore, scaled scores are not directly comparable across assessments. When a scaled score is reported in isolation, it may be difficult to determine how well a student or group of students performed. To provide a context for grade-level or age-level expectations, large-scale assessments (and some smaller-scale assessments, such as the statewide assessments required by Title I-A of the ESEA) use performance standards.

A performance standard is an agreed-upon definition of a certain level of performance in a content area (e.g., basic, proficient, advanced) that is expressed in terms of a cut score for a specific assessment. Although statewide, national, and international assessments use performance standards and may even use the same terminology (e.g., basic, proficient, advanced) to describe one or more of their performance standards, they do not use the same performance standards. For each assessment, there may be different cut scores and different definitions of each performance level. A student who is "proficient" on a statewide assessment may not be "proficient" on a national assessment and vice versa. In addition, within an individual assessment, the range of actual student performance within the "proficient" performance standard, for example, will include students whose assessment results are just high enough to be considered proficient as well as students whose assessment results almost put them into the next-highest performance standard level (e.g., advanced). In this example, the "proficient" performance standard does not distinguish between a student who is just barely proficient and one who is nearly advanced. Both students would be considered to be proficient.
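The relationship between cut scores and performance levels can be illustrated with a short sketch. The cut scores below (200, 240, and 280) and the "below basic" label are hypothetical and are not taken from any actual assessment; each real assessment defines its own cut scores and level descriptions.

```python
from bisect import bisect_right

# Hypothetical cut scores for a single assessment (assumed for illustration):
# a score at or above a cut score falls into the corresponding level.
CUT_SCORES = [200, 240, 280]
LEVELS = ["below basic", "basic", "proficient", "advanced"]


def performance_level(scaled_score, cut_scores=CUT_SCORES):
    """Return the performance level whose score range contains the scaled score."""
    return LEVELS[bisect_right(cut_scores, scaled_score)]


# A barely proficient student and a nearly advanced student get the same label.
for score in (199, 240, 279, 280):
    print(score, performance_level(score))
```

Here, scaled scores of 240 and 279 are both reported as "proficient" even though they sit at opposite ends of the range, which is the loss of detail described above.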

International assessments usually report scores differently than statewide and national assessments. Although international assessments do report a scaled score and sometimes a performance standard, they have additional ways of reporting achievement. Performance on international assessments is also reported as a rank or as a score relative to an "international average" score. Rank and international average scores tend to change from one assessment administration to the next, depending on the countries that participate in the assessment.
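A small worked example may help show why rank and international average depend on which countries participate. The country names and scores below are entirely hypothetical; the sketch only illustrates the arithmetic.

```python
def rank_and_gap(scores, country):
    """Return (rank, difference from the average of participants) for a country."""
    ordered = sorted(scores, key=scores.get, reverse=True)
    rank = ordered.index(country) + 1
    average = sum(scores.values()) / len(scores)
    return rank, scores[country] - average


# Hypothetical average scores in one administration.
first_round = {
    "Country A": 540,
    "Country B": 520,
    "United States": 510,
    "Country C": 470,
}

# The next administration adds two higher-scoring participants;
# the U.S. score itself is unchanged.
second_round = {**first_round, "Country D": 560, "Country E": 550}

print(rank_and_gap(first_round, "United States"))   # (3, 0.0)
print(rank_and_gap(second_round, "United States"))  # (5, -15.0)
```

In this illustration, the U.S. score stays at 510 in both administrations, yet its rank falls from 3rd to 5th and it moves from the international average to 15 points below it, solely because the set of participating countries changed.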

Perhaps the most important distinction between statewide and large-scale assessments is the level of reporting. Statewide assessments are administered so that scores can be reported for individual students. Because statewide assessment programs test the universe of students and each student takes all the assessment items, each student has his or her own scaled score and performance standard level (e.g., basic, proficient, advanced). In large-scale assessments, a representative sample of students is tested, and each student may only take a portion of the assessment items. This type of sampling procedure allows scores to be reported for groups of students but not individual students. Large-scale assessments, therefore, report scores for groups of students with similar demographic characteristics, groups within a large district or state, or groups within a country.
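The sampling idea described above, in which each student takes only a portion of the items, can be sketched as follows. The numbers of students, item blocks, and blocks per student are hypothetical, and the simple rotation used here is an assumption for illustration; actual assessments use more elaborate designs.

```python
from collections import Counter


def assign_blocks(student_index, n_blocks=6, blocks_per_student=2):
    """Assign a student a rotating subset of item blocks (hypothetical design)."""
    start = student_index % n_blocks
    return [(start + k) % n_blocks for k in range(blocks_per_student)]


# Count how many sampled students respond to each block of items.
coverage = Counter()
for student in range(600):
    coverage.update(assign_blocks(student))

print(assign_blocks(0))        # blocks taken by the first student
print(sorted(coverage.items()))  # students responding to each block
```

No single student sees more than two of the six blocks, so an individual score on the full assessment cannot be computed, but every block is answered by 200 students, which supports estimates for the group as a whole.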

Types of Large-Scale Assessments

Large-scale assessments are standardized assessments that are administered nationwide or worldwide. U.S. students currently participate in two types of large-scale assessments: national assessments and international assessments.

The United States administers a series of national assessments called the National Assessment of Educational Progress. Although NAEP is described as a single assessment, it is actually a series of two assessment programs: the main NAEP and the long-term trends (LTT) NAEP. The main NAEP program consists of three subprograms: national NAEP, state NAEP, and the Trial Urban District Assessment (TUDA). The United States also participates in three major international assessments: the Trends in International Mathematics and Science Study (TIMSS), the Progress in International Reading Literacy Study (PIRLS), and the Program for International Student Assessment (PISA).

Table 1 provides a quick reference guide to the characteristics of the large-scale assessments discussed in this report. Appendix A provides additional information on large-scale assessments, such as authorization and oversight provisions.

| Assessment Title | Content Areas | Grade Levels or Ages | Student Participation | Initial Assessment | Frequency of Administration |
|---|---|---|---|---|---|
| National Assessment of Educational Progress (NAEP) [a] | | | | | |
| National NAEP | Reading, mathematics, science, writing, the arts, civics, economics, geography, U.S. history, and technology and engineering literacy (TEL) [b] | 4th grade, 8th grade, and 12th grade (less frequently) | Representative sample selected; voluntary participation for states and students | 1969 | Variable [c] |
| State NAEP | Reading, mathematics, science, and writing | 4th grade, 8th grade, and 12th grade (less frequently) | Representative sample selected; required participation in 4th and 8th grade reading and mathematics assessments for states that receive ESEA, Title I-A funding; voluntary participation for students | 1990 | Every 2 years [c] |
| Trial Urban District Assessment (TUDA) NAEP | Reading, mathematics, science, and writing | 4th grade, 8th grade, and 12th grade (less frequently) | Representative sample selected; voluntary participation for districts | 2003 [d] | Every 2 years |
| Long-Term Trends (LTT) NAEP | Reading and mathematics [e] | 9-, 13-, and 17-year-olds | Representative sample selected; voluntary participation for states and students | 1969 | LTT NAEP is administered "regularly" but the frequency of administration has ranged from about every 2 to 12 years. |
| International Assessments | | | | | |
| Program for International Student Assessment (PISA) | Reading, mathematics, and science literacy | 15-year-olds | Representative sample selected; voluntary participation for countries and students | 2000 | Every 3 years |
| Progress in International Reading Literacy Study (PIRLS) | Reading, school and teacher practices related to instruction, students' attitudes towards reading, and reading habits | 4th grade | Representative sample selected; voluntary participation for countries and students | 2001 | Every 5 years |
| Trends in International Mathematics and Science Study (TIMSS) | Mathematics and science [f] | 4th grade, 8th grade, and 12th grade | Representative sample selected; voluntary participation for countries and students | 1995 | Every 4 years |

Source: CRS summary of national and international assessments, available from the U.S. Department of Education (ED).

a. The NAEP has two assessment programs: main NAEP and LTT NAEP. The main NAEP has three subprograms: national NAEP, state NAEP, and TUDA NAEP. The main NAEP subprograms have significant overlap. The LTT NAEP differs from the main NAEP in its origin, frequency, and content areas assessed. For more information, see https://nces.ed.gov/nationsreportcard/about/ltt_main_diff.aspx.

b. The national NAEP does not assess each content area at each administration. For more information on the content areas assessed by year, see the NAEP assessment schedule: https://nces.ed.gov/nationsreportcard/about/assessmentsched.aspx.

c. The state NAEP does not assess each content area at each administration. Although state NAEP is typically assessed every two years, it was assessed in both 2002 and 2003. At this time, the TUDA program was in a trial period, and the timing of assessments was being coordinated across programs. For more information, see the NAEP assessment schedule: https://nces.ed.gov/nationsreportcard/about/assessmentsched.aspx.

d. The TUDA does not assess each content area at each administration. The initial TUDA was administered in 2002 in the content areas of reading and writing. The first TUDA administration to assess both reading and mathematics was in 2003. TUDA reading and mathematics has been assessed every two years since 2003. For more information, see the NAEP assessment schedule: https://nces.ed.gov/nationsreportcard/about/assessmentsched.aspx.

e. Historically, the LTT NAEP assessed a wider variety of content areas; however, content areas other than mathematics and reading have not been assessed since 1999 because NAGB changed its policy on the LTT NAEP. For more information, see the National Assessment Governing Board Long-Term Trends Policy Statement, adopted May 18, 2002, available at https://www.nagb.gov/content/nagb/assets/documents/policies/Long-term%20Trend.pdf.

f. Specific mathematics and science skills depend on the grade level assessed. For more information, see https://nces.ed.gov/timss/faq.asp#7.

National Assessments: The National Assessment of Educational Progress

The NAEP is referred to as the "Nation's Report Card" because it is the only nationally representative assessment of what America's students know and can do in various content areas.12 The original NAEP program began in 1969 and the first assessment was administered in 1971. The National Assessment of Educational Progress Authorization Act authorizes the NAEP.13 The Commissioner for the National Center for Education Statistics (NCES) in the U.S. Department of Education (ED) is responsible for the administration of the NAEP. The Secretary of Education appoints members to the National Assessment Governing Board (NAGB) to set the policy for NAEP administration. The Commissioner of NCES and NAGB meet regularly to coordinate activities.

In the first two decades of NAEP administration, there was no "main NAEP" program or "LTT NAEP" program. Beginning in 1990, however, the NAEP program evolved into two separate assessment programs.

Main NAEP Program

The main NAEP program was first administered in 1990. In 1996, NAGB14 issued a policy statement to redesign the NAEP.15 The most noteworthy change was splitting the NAEP into two "unconnected" assessment programs. NAGB proposed a "main NAEP" program that would become the primary way to measure reading, mathematics, science and writing. NAGB recognized, however, that the nation's curricula would continue to change over time and there would still be value in tracking long-term trends with a stable assessment. NAGB, therefore, proposed the LTT NAEP assessment would be continued, though less frequently, to track trends over time. The main NAEP assessment framework was expected to change about every decade to account for changes in the nation's curricula while the LTT NAEP assessment framework was set to be stable over time.

Another noteworthy change of the 1996 policy statement was the development of performance standards for the main NAEP. Although the original NAEP had numeric performance levels (i.e., 150, 200, 250, 300, 350), there were no descriptive performance standards associated with these levels (i.e., basic, proficient, and advanced). Performance standards were introduced with the administration of the main NAEP in 1990. The standards were subsequently amended several times over the next five years. In 1996, NAGB committed to improving the performance standards and recommended the continued use of performance standards. Because of this policy shift, the main NAEP and its subprograms continue to use basic, proficient, and advanced as their performance levels.16

The main NAEP has evolved over time and split into several subprograms: national, state, and TUDA. The national NAEP assesses the widest range of subject areas. For the national NAEP, a sample is selected from public and private schools and students, creating a representative sample across the nation.

The state NAEP program17 began as a trial assessment program in 1990 and currently assesses four subject areas: reading, mathematics, writing, and science. In 1996, the state NAEP program was no longer considered a trial and it included 43 states and jurisdictions.18 In 2001, there was a significant change to the state NAEP program due to the reauthorization of the ESEA by the No Child Left Behind Act (NCLB; P.L. 107-110). The NCLB required states that receive Title I-A funding to participate in biennial NAEP assessments of reading and mathematics in 4th and 8th grades, provided that the Secretary of Education pays for the testing. Although states receiving funding are required to participate, only a sample of schools, and a sample of students within those schools, are selected from each state to participate, creating a representative sample of students within each participating state. Participation is voluntary at the individual level. The assessments administered in the state NAEP program are exactly the same as the national NAEP assessments. The latest reauthorization of the ESEA retained the requirement that states receiving Title I-A funding participate in these assessments. In 2017, the most recent administration of the main NAEP, 585,000 4th and 8th grade students participated.

The TUDA program assesses four subject areas: reading, mathematics, writing, and science. The TUDA19 began in 2002 with six participating districts.20 Participation has grown with each administration, and in 2017, 27 districts voluntarily participated. A total of 66,500 students participated in the 2017 mathematics assessment and 65,300 students participated in the 2017 reading assessment. The assessments administered in the districts are exactly the same as the national and state NAEP assessments.

LTT NAEP Program

Although it was not called the LTT NAEP program at the time, the LTT NAEP program is typically considered to date back to the origin of NAEP in 1969. Since it was initially the only NAEP assessment, the LTT NAEP assessment items changed over time throughout the 1970s and 1980s to reflect changes in the nation's curricula. Since 1990, however, the LTT NAEP program has remained unchanged. This continuity of assessment items over time is what allows the LTT to accurately track long-term trends. In early administrations of the LTT NAEP program, a wide range of content areas was assessed, including reading, mathematics, science, writing, citizenship, literature, social studies, music, art, and several areas of basic skills. In 1999, due to the development and administration of the main NAEP program, the LTT began to assess only reading and mathematics.21

The LTT NAEP program currently assesses 9-, 13-, and 17-year-old students in reading and mathematics. The LTT NAEP was most recently administered in 2012 to approximately 53,000 students. Although the LTT NAEP was previously administered about every four years, the next administration is scheduled for 2024.22

U.S. Performance on the National Assessment of Educational Progress

ED provides reports and data tools to explore the results of the NAEP. For example, ED releases publications and multimedia materials for educators, researchers, news organizations, and the public.23 ED also provides access to the NAEP Data Explorer, which allows the public to create customizable tables and graphics by state, district, content area, etc.24 The NAEP Data Explorer can also be used to conduct basic research analyses of NAEP data, such as significance testing, gap analysis, and regression analysis.

Due to the amount of information provided by NAEP publications and the NAEP Data Explorer, it is not feasible to cover all NAEP results in this report. The discussion of NAEP results presented here focuses on major trends in performance over time as well as some recent trends. These trends are examined in terms of average scores across groups and average scores across groups of different achievement levels (i.e., 10th, 25th, 50th, 75th, and 90th percentiles of achievement). Average trends across different achievement levels are often examined to determine whether the improvement (or lack thereof) can be attributed to higher-achieving students, lower-achieving students, or all students. Additionally, achievement gaps over time that are reported in NAEP publications are also presented. Results discussed herein are used in subsequent sections of this report to highlight some of the issues of interpretation in large-scale assessments. For links to more comprehensive results for NAEP, see Appendix B.

Highlights from 2017 Main NAEP

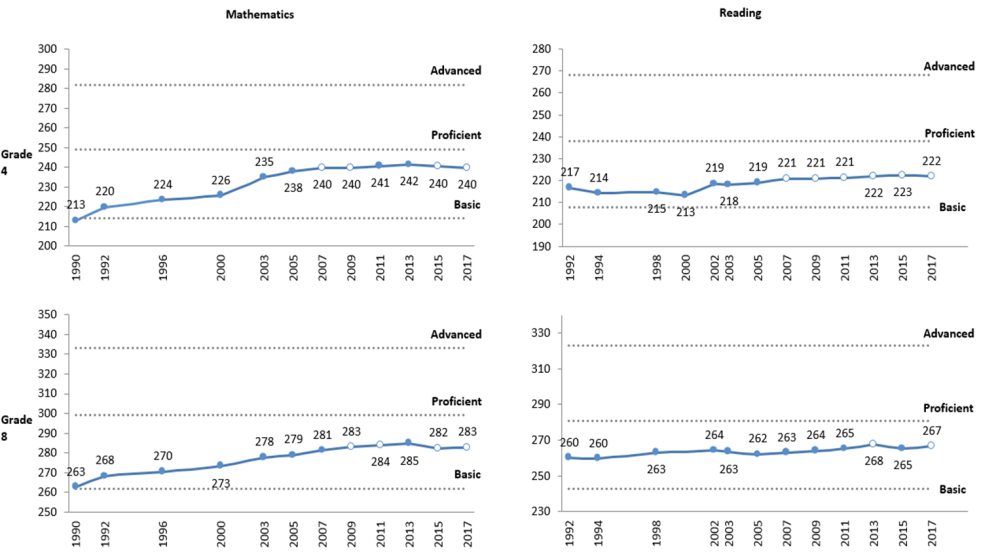

The most recent administration of the main NAEP was in 2017. Figure 1 shows the mathematics and reading results for 4th and 8th graders.

- Average scores have increased significantly in mathematics and reading performance for 4th and 8th grade on the main NAEP (since 1990 and 1992, respectively).

- Compared to the 2015 administration of the NAEP, average mathematics scores did not change significantly for 4th or 8th grade. Average reading scores did not change significantly for 4th grade students, but there was a small, statistically significant improvement for 8th grade students.25

Statistical Significance in Assessment Score Results Reported in Figures

The figures in this section of the report present trend lines of data points. The differences between certain assessment results are tested for statistical significance. All significance tests are relative to the last year of assessment administration included in the figure and represented by an open data point. Any solid data point along the trend line indicates a statistically significant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. Any open data point along the trend line indicates a statistically insignificant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure.

Source: U.S. Department of Education, 2017 NAEP Mathematics & Reading Assessments: Highlighted Results at Grades 4 and 8 for the Nation, States, and Districts, National Scores at a Glance, https://www.nationsreportcard.gov/reading_math_2017_highlights/.

Notes: All significance tests are relative to the last year of assessment administration included in the figure and represented by an open data point. Any solid data point along the trend line indicates a statistically significant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. Any open data point along the trend line indicates a statistically insignificant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. For more information on NAEP performance standards, see https://nces.ed.gov/nationsreportcard/tdw/analysis/describing_achiev.aspx.

NAEP uses three performance standard levels to describe achievement: basic, proficient, and advanced.26 For all grades and subject areas, U.S. students' average performance in 2017 falls between the basic and proficient levels. For the NAEP assessment, the proficient level of achievement is not considered "grade-level work." The proficient level is considered mastery of challenging subject matter, including the application of knowledge and demonstration of analytical skills.27

- For 4th grade mathematics, 40% of students scored at or above the proficient level, which is not significantly different than the previous administration in 2015 (40%) but significantly higher than the initial administration in 1990 (13%).28

- For 8th grade mathematics, 34% of students scored at or above the proficient level, which is not significantly different than the previous administration in 2015 (33%) but significantly higher than the initial administration in 1990 (15%).29

- For 4th grade reading, 37% of students scored at or above the proficient level, which is not significantly different than the previous administration in 2015 (36%) but significantly higher than the initial administration in 1992 (29%).30

- For 8th grade reading, 36% of students scored at or above the proficient level, which is significantly higher than the previous administration in 2015 (34%) and the initial administration in 1992 (29%).31

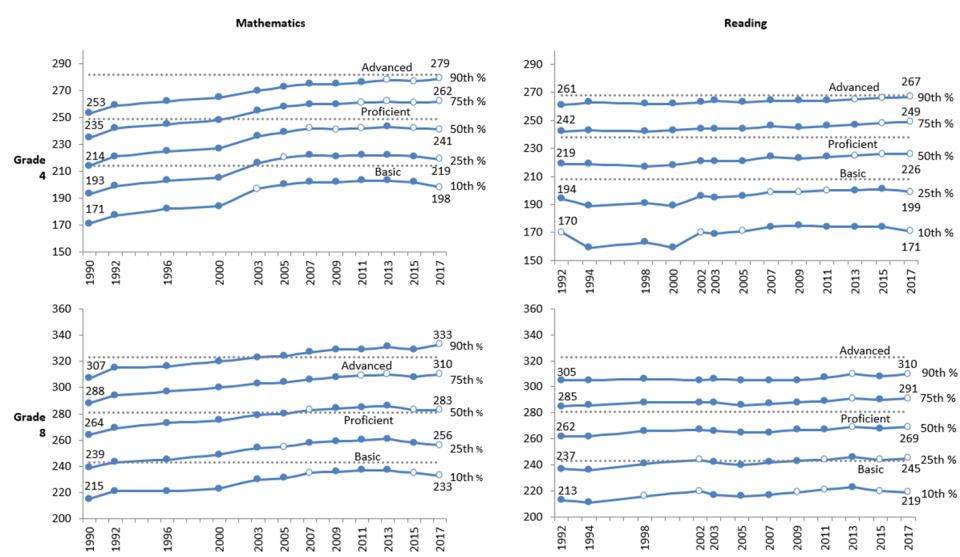

Main NAEP scores are also reported at five different percentiles to track the performance of lower-achieving students, average-achieving students, and higher-achieving students over time (i.e., 10th, 25th, 50th, 75th, and 90th percentiles). Figure 2 shows the progress over time for students achieving at various percentiles.

- Significant gains on the main NAEP assessment in the last several years are driven by students who are in higher-achieving percentile groups.

- 8th grade students in the 75th and 90th percentile groups made significant gains in mathematics and reading since 2015.

- 8th grade students in the 25th percentile group scored significantly lower in mathematics since 2015.

- 4th grade students in the 25th and 10th percentile groups scored significantly lower in mathematics and reading since 2015.32

Figure 2. Average Main NAEP Performance, 1990-2017, by Percentile of Achievement

Source: U.S. Department of Education, 2017 NAEP Mathematics & Reading Assessments: Highlighted Results at Grades 4 and 8 for the Nation, States, and Districts, National Scores at a Glance, https://www.nationsreportcard.gov/reading_math_2017_highlights/.

Notes: All significance tests are relative to the last year of assessment administration included in the figure and represented by an open data point. Any solid data point along the trend line indicates a statistically significant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. Any open data point along the trend line indicates a statistically insignificant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. For more information on NAEP performance standards, see https://nces.ed.gov/nationsreportcard/tdw/analysis/describing_achiev.aspx.

Highlights from LTT NAEP

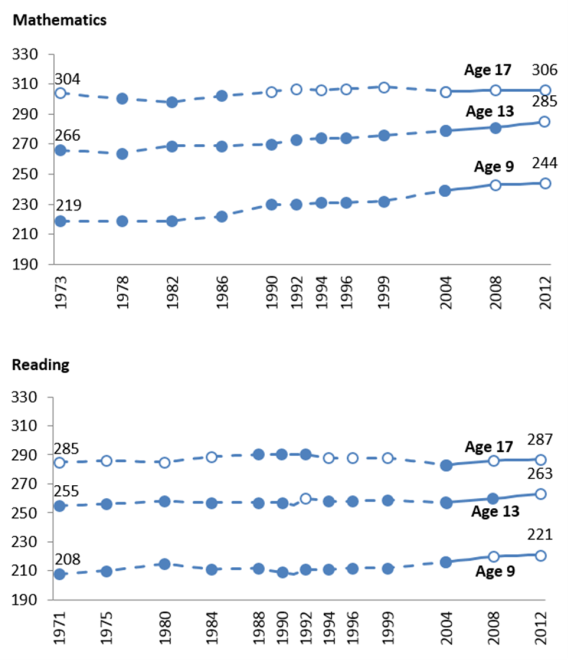

Figure 3 shows the trend in average NAEP mathematics and reading performance on the LTT from the early 1970s until the most recent assessment in 2012.

- The LTT NAEP assessment corroborates the gains observed on the main NAEP for 9- and 13-year-old students.33

- In both mathematics and reading, 9- and 13-year-old students have shown significant gains over time.34

Figure 3. LTT NAEP Average Mathematics and Reading Scores

Source: U.S. Department of Education, NAEP 2012: Trends in Academic Progress, NCES 2013-456, 2013, https://nces.ed.gov/nationsreportcard/subject/publications/main2012/pdf/2013456.pdf.

Notes: All significance tests are relative to the last year of assessment administration included in the figure and represented by an open data point. Any solid data point along the trend line indicates a statistically significant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. Any open data point along the trend line indicates a statistically insignificant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure.

Achievement Gaps Reported by Main NAEP

Achievement gaps occur when one subgroup of students significantly outperforms another subgroup of students on an assessment of academic achievement. In the United States, there have historically been observed achievement gaps by gender, race, ethnicity, socioeconomic status, and disability status. NAEP results have highlighted various achievement gaps and tracked them over time. The following section reports selected achievement gaps that are often highlighted in publications presented by ED. This section does not, however, examine all possible achievement gaps.35

The 2017 NAEP results reveal that significant achievement gaps exist by gender, race, ethnicity, socioeconomic status, and school factors. Table 2 shows the size of the most recent gaps. The largest achievement gaps are typically by race, ethnicity, and socioeconomic status. For the 2017 NAEP results,

- the largest significant gap reported is that between white students and black students in 8th grade mathematics (32 points),

- the second largest significant gap reported is that between students not eligible for the National School Lunch Program (NSLP) and students who are eligible for the program in 8th grade mathematics,36 and

- the smallest significant achievement gaps reported are between male students and female students in mathematics.37

| 2017 Score Gap | Mathematics, 4th Grade | Mathematics, 8th Grade | Reading, 4th Grade | Reading, 8th Grade |
|---|---|---|---|---|
| Male – Female | 2 points | 1 point | -6 points | -10 points |
| White – Black | 25 points | 32 points | 26 points | 25 points |
| White – Hispanic | 19 points | 24 points | 23 points | 19 points |
| Asian/Pacific Islander – White | 10 points | 17 points | 7 points | 7 points |
| Not Eligible for NSLP – Eligible for NSLP | 24 points | 29 points | 29 points | 24 points |
| Catholic – Public | 6 points | 12 points | 14 points | 18 points |
| Other Non-charter Public Schools – Charter Schools | 4 points | 1 point [a] | No difference [b] | No difference [b] |

Source: U.S. Department of Education, 2017 NAEP Mathematics & Reading Assessments: Highlighted Results at Grades 4 and 8 for the Nation, States, and Districts, National Scores at a Glance, https://www.nationsreportcard.gov/reading_math_2017_highlights/.

Notes: All achievement gaps included in the table are statistically significant, unless otherwise noted.

Some achievement gaps have changed since the early 1990s. As reported by ED, some have increased significantly over time.

- The largest increases in an achievement gap over time are in the gap between white students and Asian/Pacific Islander students in 4th grade reading (15 points) and 8th grade mathematics (12 points). In 4th grade reading, white students outperformed Asian/Pacific Islander students in 1992 but are now significantly outperformed by them. In 8th grade mathematics, Asian/Pacific Islander students outperformed white students in 1990 and the gap has become significantly larger over time.38

Other achievement gaps have decreased significantly over time.

- The gap between white students and black students has decreased in both 4th grade mathematics (7 points) and 4th grade reading (6 points).

- The gap between white students and Hispanic students has decreased in 8th grade reading (7 points).39

Achievement Gaps Reported by LTT NAEP

The LTT NAEP also tracks achievement gaps over time. In general, achievement gaps have significantly narrowed or remained unchanged. For example, the gap between white students and black students in reading at age 9 has narrowed since 1971. While the average score for white students increased 15 points, the average score for black students increased 36 points, leading to the narrowing of the achievement gap.40 None of the measured achievement gaps in the LTT NAEP have increased significantly over time.
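The arithmetic behind the age 9 reading example can be made explicit. The following is a minimal sketch that uses only the two score changes cited above; the variable names are illustrative.

```python
# Score changes for 9-year-olds in LTT NAEP reading since 1971, as cited above.
white_gain = 15
black_gain = 36

# The white-black gap changes by the difference between the two gains.
# A negative value means the gap narrowed.
gap_change = white_gain - black_gain
print(f"Change in white-black gap: {gap_change} points")
```

Because the gain for black students exceeded the gain for white students by 21 points, the gap narrowed by that amount.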

International Assessments

The United States regularly participates in three international assessments: TIMSS, PIRLS, and PISA. While U.S. students have participated in international assessments since the 1960s, the modern era of international assessments began in the mid-1990s.41 This report focuses on international assessment results that highlight U.S. student performance over time and in relation to other countries. Results discussed herein are used in subsequent sections of this report to highlight some of the issues of interpretation in large-scale assessments. For links to more comprehensive results for the international assessments, see Appendix B.

TIMSS

The TIMSS is an international comparative study that is designed to measure mathematics and science achievement in 4th and 8th grades. The TIMSS is designed to measure "school-based learning," and is designed to be broadly aligned with mathematics and science curricula in participating education systems (i.e., countries and some sub-national jurisdictions).42 The United States has participated in the TIMSS every four years since 1995. Less often, 12th grade students participate in the TIMSS Advanced program, which measures advanced mathematics and physics.43 In 2015, approximately 20,250 U.S. students participated in TIMSS and about 5,900 U.S. students participated in TIMSS Advanced. The United States was one of over 60 education systems to participate in TIMSS and one of 9 to participate in the TIMSS Advanced program.44 All participation in TIMSS is voluntary. The next TIMSS administration is scheduled for 2019. No date has been announced for the next TIMSS Advanced administration.

The TIMSS is conducted in the United States under the authority of international assessment activities.45 TIMSS assessments in the United States are administered by the Commissioner of NCES within the International Activities Program. The International Association for the Evaluation of Educational Achievement (IEA) coordinates TIMSS and TIMSS Advanced internationally.

U.S. Performance on TIMSS in Relation to Other Countries46

TIMSS reports results separately for mathematics and science. U.S. results for TIMSS mathematics are as follows:47

- In 4th grade in 2015, the United States scored significantly lower than 10 education systems, significantly higher than 34 education systems, and not significantly different than 9 education systems.48

- In 8th grade, the United States scored significantly lower than 8 education systems, significantly higher than 24 education systems, and not significantly different than 10 education systems.49

U.S. results for TIMSS science are as follows:

- In 4th grade in 2015, the United States scored significantly lower than 7 education systems, significantly higher than 38 education systems, and not significantly different than 7 education systems.50

- In 8th grade, the United States scored significantly lower than 7 education systems, significantly higher than 26 education systems, and not significantly different than 9 education systems.51

U.S. Performance on TIMSS over Time by Achievement Percentiles

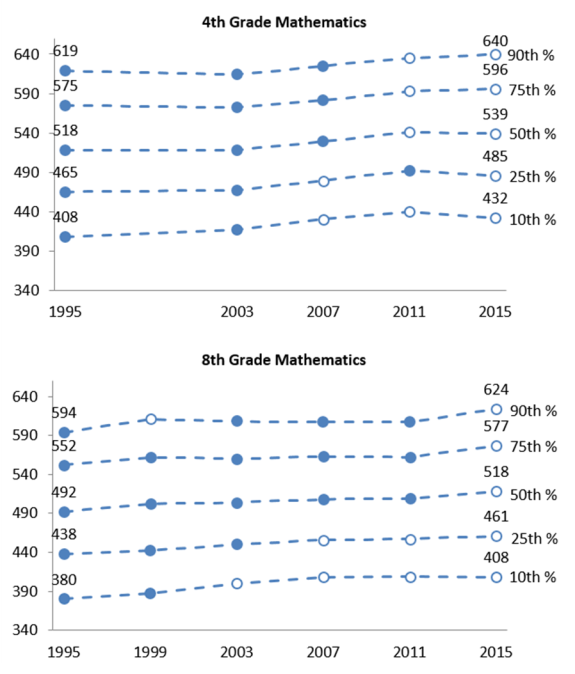

TIMSS reports results over time by achievement level for U.S. students (i.e., 10th percentile, 25th percentile, 50th percentile, 75th percentile, and 90th percentile). Figure 4 shows the results for 4th and 8th grade mathematics for U.S. students.

- Increases in achievement for 4th grade mathematics may be driven by the performance of average or above average groups; however, the increases are not statistically significant.52

- Performance on TIMSS increased for all achievement levels in 8th grade mathematics; however, the increases were significant for average and above average groups but not for below average groups.

Figure 4. Trends in U.S. 4th and 8th Grade TIMSS Average

Source: U.S. Department of Education, National Center for Education Statistics, Highlights From TIMSS and TIMSS Advanced 2015, NCES 2017-002, November 2016, https://nces.ed.gov/pubs2017/2017002.pdf.

Notes: All significance tests are relative to the last year of assessment administration included in the figure and represented by an open data point. Any solid data point along the trend line indicates a statistically significant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. Any open data point along the trend line indicates a statistically insignificant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure.

Science results for U.S. students are also reported over time and by achievement level. Figure 5 shows results for science performance for students in 4th and 8th grade over time and by achievement level.

- Science achievement in 4th and 8th grades has been generally flat since the 2011 administration of TIMSS.

- There have been some significant increases in 4th and 8th grade science achievement since the 2007 administration of TIMSS for students whose achievement falls between the 25th and 75th percentiles.

Figure 5. Trends in U.S. 4th and 8th Grade TIMSS Average

Source: U.S. Department of Education, National Center for Education Statistics, Highlights From TIMSS and TIMSS Advanced 2015, NCES 2017-002, November 2016, https://nces.ed.gov/pubs2017/2017002.pdf.

PIRLS

PIRLS is an international comparative study of 4th grade students in reading literacy. PIRLS assesses reading literacy at 4th grade because this is typically considered a developmental stage of learning where students shift from learning to read to reading to learn. PIRLS is not an assessment of word reading ability but rather an assessment of the purposes for reading, processes of comprehension, and reading behavior and attitudes. For young students, reading generally has two purposes both in and out of school: (1) reading for literary experience, and (2) reading to acquire and use information.

The United States has participated in PIRLS every five years since 2001. The next assessment of PIRLS will be administered in 2021. In 2016, the United States also participated in the first administration of ePIRLS, a computer-based assessment of online reading. ePIRLS is designed to measure informational reading comprehension skills in an online environment.53 In 2016, approximately 4,500 U.S. students participated in PIRLS and an additional 4,000 students participated in ePIRLS. The United States was one of 61 education systems to participate in PIRLS and one of 16 to participate in ePIRLS. All participation in PIRLS and ePIRLS is voluntary.

PIRLS is conducted in the United States under the authority of international assessment activities.54 The PIRLS and ePIRLS assessments in the United States are administered by the Commissioner of NCES within the International Activities Program. As with TIMSS, the IEA coordinates PIRLS internationally.

U.S. Performance on PIRLS and ePIRLS in Relation to Other Countries

Results for PIRLS and ePIRLS are reported separately.55

- For PIRLS, the United States scored significantly lower than 12 education systems, significantly higher than 30 education systems, and not significantly different than 15 education systems.56

- For ePIRLS, the United States scored significantly lower than 3 education systems, significantly higher than 10 education systems, and not significantly different than 2 education systems.57

U.S. Performance on PIRLS over Time by Achievement Levels

PIRLS reports results for U.S. students over time by achievement level (i.e., 10th percentile, 25th percentile, 50th percentile, 75th percentile, and 90th percentile).58 Figure 6 shows the results for 4th grade reading achievement by achievement level across time.

- In general, U.S. student performance from 2001 to 2016 was relatively flat.

Figure 6. Trends in U.S. 4th Grade Average PIRLS

Source: U.S. Department of Education, National Center for Education Statistics, Reading Achievement of U.S. Fourth-Grade Students in an International Context, NCES 2018-017, December 2017, https://nces.ed.gov/pubs2018/2018017.pdf.

Notes: All significance tests are relative to the last year of assessment administration included in the figure and represented by an open data point. Any solid data point along the trend line indicates a statistically significant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure. Any open data point along the trend line indicates a statistically insignificant difference between that year's assessment results and the assessment results for the last year of assessment administration included in the figure.

PISA

PISA is an international comparative study of 15-year-old students in the content areas of science, reading, and mathematics "literacy." It aims to measure the achievement of students at the end of their compulsory education.59 The PISA is not designed to measure "school-based learning" and is not designed to be aligned with academic content standards. Instead, PISA intends to measure students' preparation for life and focuses on science, reading, and mathematics problems within a real-life context.60 The United States has participated in PISA every three years since 2000. In 2015, approximately 6,000 U.S. students participated in PISA. The United States was one of 72 countries and economies to participate. All participation is voluntary. PISA 2018 was administered in the fall of 2018, and results are tentatively scheduled to be released in December 2019.61

PISA is conducted under the authority of international assessment activities.62 The PISA assessment in the United States is administered by the Commissioner of NCES within the International Activities Program. Unlike TIMSS and PIRLS, the international coordination of the PISA is conducted by the Organisation for Economic Cooperation and Development (OECD), an intergovernmental organization of industrialized countries.

U.S. Performance on PISA in Relation to Other Countries

PISA reports results separately for reading literacy, mathematics literacy, and science literacy.63

- For reading literacy, in 2015, the United States scored significantly lower than 14 education systems, significantly higher than 42 education systems, and not significantly different than 13 education systems.64

- For mathematics literacy, in 2015, the United States scored significantly lower than 36 education systems, significantly higher than 28 education systems, and not significantly different than 5 education systems.65

- For science literacy, in 2015, the United States scored significantly lower than 18 education systems, significantly higher than 39 education systems, and not significantly different than 12 education systems.66

U.S. Performance on PISA Over Time

Unlike the NAEP and other international assessments, PISA does not track progress over time in the same way for different levels of achievement (e.g., 10th, 25th, 50th, 75th, and 90th percentiles). PISA does, however, track average performance over time. Table 3 shows average score changes for U.S. students in mathematics, reading, and science literacy:

- For mathematics literacy, the average score in 2015 was 11 points lower than the average score in 2012 and 17 points lower than the average score in 2009; however, the average score in 2015 was not measurably different than the average scores in 2003 and 2006.

- For reading literacy, the average score in 2015 was not measurably different than in previous years.

- For science literacy, the average score in 2015 was not measurably different than in previous years.

| Subject | Average Score, 2003 | Average Score, 2006 | Average Score, 2009 | Average Score, 2012 | Average Score, 2015 | Change in Average Score, 2015-2003 | Change in Average Score, 2015-2006 | Change in Average Score, 2015-2009 | Change in Average Score, 2015-2012 |
|---|---|---|---|---|---|---|---|---|---|
| Mathematics | 483 | 474 | 487 | 481 | 470 | No change | No change | Decrease [a] | Decrease [a] |
| Reading | N/A | N/A | 500 | 498 | 497 | N/A | N/A | No change | No change |
| Science | N/A | 489 | 502 | 497 | 496 | N/A | No change | No change | No change |

Source: U.S. Department of Education, National Center for Education Statistics, Performance of U.S. 15-Year-Old Students in Science, Reading, and Mathematics Literacy in an International Context, NCES 2017-048, December 2016, https://nces.ed.gov/pubs2017/2017048.pdf.
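The point differences cited for mathematics literacy can be recomputed from the average scores in Table 3. The following is a minimal sketch; the function name `change_to_latest` is illustrative and is not part of any NCES or OECD tool.

```python
# U.S. average PISA mathematics literacy scores, copied from Table 3.
math_scores = {2003: 483, 2006: 474, 2009: 487, 2012: 481, 2015: 470}


def change_to_latest(scores):
    """Return the latest year's score minus each earlier year's score."""
    latest_year = max(scores)
    return {
        year: scores[latest_year] - score
        for year, score in sorted(scores.items())
        if year != latest_year
    }


math_changes = change_to_latest(math_scores)
for year, diff in math_changes.items():
    print(f"2015 minus {year}: {diff:+d} points")
```

The raw differences alone do not determine which changes are measurably different. The 13-point difference from 2003 is reported as no change while the 11-point difference from 2012 is reported as a decrease, so those determinations evidently rest on information, such as standard errors, that is not reproduced in this report.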

Issues of Interpretation in Large-Scale Assessments

Results of national and international assessments are difficult to interpret for a number of reasons. Perhaps the most difficult issue in the interpretation of large-scale assessments is processing the large volume of data presented in reports. The results provided in the previous section are a small fraction of what is available. These specific results were reported to provide a broad overview of the achievement of U.S. students across a wide range of assessments over time.

When large numbers of results are reported in national and international assessments, it can be challenging to compile assessment results across assessments to determine how well U.S. students are achieving over time and relative to other countries. The purpose of this section of the report is to present a few issues to consider when interpreting national and international assessments. This discussion is not intended to provide a comprehensive list of possible considerations; however, the key issues presented below are pervasive across large-scale assessments.

The "Significance" of Assessment Results

The concept of statistical significance is central to reporting assessment results. When states or countries are presented in a rank order, it is important to note whether differences in rank are statistically significant. For example, as reported in the TIMSS results above, in 4th grade mathematics, the United States scored lower than 10 education systems, higher than 34 education systems, and not measurably different than 9 education systems. When average scores are presented in a rank order, however, the United States is ranked number 15.67 Four education systems above the United States and five education systems below the United States had average scores that were not statistically significantly different from the United States. Strictly ranking average scores does not account for statistically insignificant differences between average scores.
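The difference between a strict rank and a significance-based comparison can be sketched as follows. The scores and standard errors are hypothetical and are not actual TIMSS results. The test shown, a two-sided z-test on independent samples at roughly the 5% level, is an assumption made for illustration; the procedures used in official reports may differ.

```python
import math


def compare_to_reference(ref, others, z_crit=1.96):
    """Classify each system as higher, lower, or not different from ref.

    ref is (mean, standard_error); others maps name -> (mean, standard_error).
    Assumes independent samples and a two-sided test at roughly the 5% level.
    """
    ref_mean, ref_se = ref
    result = {}
    for name, (mean, se) in others.items():
        z = (mean - ref_mean) / math.sqrt(se**2 + ref_se**2)
        if z > z_crit:
            result[name] = "higher"
        elif z < -z_crit:
            result[name] = "lower"
        else:
            result[name] = "not different"
    return result


# Hypothetical values for illustration only; not actual assessment results.
reference = (540.0, 2.5)
systems = {
    "System A": (560.0, 2.0),
    "System B": (544.0, 3.0),
    "System C": (537.0, 2.8),
    "System D": (510.0, 3.1),
}

labels = compare_to_reference(reference, systems)
# Strict rank: one plus the number of systems with a higher average score.
strict_rank = 1 + sum(mean > reference[0] for mean, _ in systems.values())

print(f"Strict rank of reference system: {strict_rank}")
for name, label in labels.items():
    print(f"{name}: {label}")
```

In this sketch the reference system ranks third on average score, yet only one system scores significantly higher than it. This mirrors the 4th grade mathematics example, in which four of the systems ranked above the United States were not measurably different from it.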

Statistical significance indicates whether a change is unlikely to be due to chance. It may not, however, be the most important indicator of meaningful change, because it is influenced by many factors. For the purposes of this discussion, the most relevant factor that influences statistical significance is sample size. The larger the sample size, the more likely a small change in assessment score will be statistically significant. Recall that national and international assessments sample tens of thousands or hundreds of thousands of U.S. students. Due to large samples, small increases or decreases in academic achievement may be statistically significant. For example, in the most recent NAEP administration, a two-point increase in 8th grade reading performance was statistically significant.
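The relationship between sample size and statistical significance can be illustrated with a minimal sketch. It assumes simple random samples of equal size, a normal approximation, and a score standard deviation of 35 points. These are illustrative assumptions rather than NAEP parameters, and large-scale assessments typically use more complex sample designs.

```python
import math


def two_sided_p_value(diff, sd, n_per_group):
    """Two-sided p-value for a difference in means between two equal-sized
    independent groups, using a normal approximation."""
    standard_error = sd * math.sqrt(2.0 / n_per_group)
    z = diff / standard_error
    return math.erfc(abs(z) / math.sqrt(2.0))


# Assumed values for illustration: a 2-point difference and a score
# standard deviation of 35 points (not taken from NAEP documentation).
diff, sd = 2.0, 35.0
sample_sizes = (100, 1_000, 10_000, 100_000)
p_values = {n: two_sided_p_value(diff, sd, n) for n in sample_sizes}

for n, p in p_values.items():
    print(f"n per group = {n:>7,}: p = {p:.4f}")
```

Under these assumptions, the same two-point difference is not significant at the 5% level with 100 or 1,000 students per group but is significant with 10,000 or more.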

Statistical significance is not the same as educational significance. Educational significance is subjective and dependent on the educational context of the results. Statistical significance cannot determine the magnitude of the difference and whether or not it is of educational significance. For example, as reported above in the NAEP results, there is a statistically significant gap of one point between male and female students in 8th grade mathematics. There is also a statistically significant gap of 32 points between white and black students in 8th grade mathematics. While both gaps are statistically significant, the gap between white and black students may have more educational significance.

Some researchers argue that statistical significance can be misleading for policy purposes because a statistically significant result may be too small to warrant a change in practices or policies.68 Educational significance is more subjective and difficult to define when considering assessment results. One way educational researchers have tried to define educational significance or practical significance is by using an effect size.69 An effect size can better determine the magnitude of an effect; however, there is still no consensus on the magnitude of an effect size that is meaningful in all contexts.70
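One common effect size is the standardized mean difference (often called Cohen's d), which expresses a score gap in standard deviation units. The following minimal sketch applies it to the two 8th grade mathematics gaps cited in this report. The pooled standard deviation of 38 points is an assumption made for illustration, because this report does not provide one.

```python
def cohens_d(mean_difference, pooled_sd):
    """Standardized mean difference: the gap in standard deviation units."""
    return mean_difference / pooled_sd


# Gaps in 8th grade mathematics cited in this report (in scale points).
gaps = {"Male - Female": 1, "White - Black": 32}

# Assumed pooled standard deviation, for illustration only; the report
# does not give one.
assumed_sd = 38.0

effect_sizes = {name: cohens_d(gap, assumed_sd) for name, gap in gaps.items()}
for name, d in effect_sizes.items():
    print(f"{name}: {gaps[name]} points, d = {d:.2f}")
```

Under this assumption, the one-point gap corresponds to a very small fraction of a standard deviation, while the 32-point gap corresponds to most of a standard deviation, even though both gaps are statistically significant.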

The Narrow Focus on One Assessment

When new national and international assessment results are released, there is a tendency to focus on a single assessment or a single result. A narrow focus on one assessment at one point in time, however, may not provide appropriate context for interpreting the results. Examining differences in results across assessments and trends over time can provide a more meaningful context for interpretation.

For example, PISA results show a statistically significant decrease in mathematics literacy scores from 2012 to 2015. When considered in isolation, this result may indicate that the mathematics achievement of 15-year-old U.S. students is declining. Consider, however, that 8th grade U.S. students made statistically significant gains in mathematics on TIMSS from 2011 to 2015 and showed no change in mathematics on the NAEP from 2015 to 2017. While conflicting results like these can be frustrating, it is important to consider them together as a body of evidence instead of isolated data points. There may be valid reasons that U.S. students' performance decreased on PISA and increased on TIMSS. For example, as discussed above, TIMSS measures more "school-based learning," and U.S. students have historically scored relatively higher on this assessment. Perhaps the content standards and curriculum in place in the United States are more aligned with content assessed by the TIMSS and less aligned with the content assessed by the PISA.

Another issue to consider is the trend over time. For example, although U.S. students' performance on PISA significantly decreased from 2009 to 2015, the 2015 score is not measurably different than the average scores in 2003 or 2006.71 While any significant decrease may be cause for concern, it is important to recognize that scores have not decreased significantly since the initial administration of the PISA. While a statistically insignificant change in achievement across 10 to 15 years may not be considered a positive result, the long-term trend presents a different picture of achievement than the short-term trend. Examining trends allows researchers and policymakers to identify policies and practices that were implemented at a certain time that may have contributed to an observed trend.

In general, reports from a single assessment that claim U.S. student achievement has stagnated, increased, or decreased must be interpreted with caution. When one result is reported in isolation, it is easy to make oversimplified conclusions that do not necessarily generalize across assessments and over time.72

Socioeconomic Considerations Across Countries

International assessment results are based on a representative sample of students. There are considerable differences in the characteristics of students within certain countries, however, and an accurate representative sample would also reflect these differences. Some of the differences in populations across countries may have considerable implications in the interpretation of assessment results. For example, one difference that has been found to have implications for the interpretation of assessment results is the range of socioeconomic inequality. The United States has a broader income distribution than many of the countries that participate in international assessments. The sample from the United States, therefore, likely has a larger number of students from lower-income families than samples from countries with more concentrated income distributions.

Some researchers argue that "social class inequality," which is largely determined by income, is a major factor in the interpretation of international assessment results.73 These researchers found that students from lower-income families perform worse than students from higher-income families in every country in their analysis. Since there are more lower-income families in the United States than in some of the countries it is routinely compared to, researchers argue that the relative performance of U.S. students is actually better than it appears when simply comparing countries' national averages.

In an analysis of 2009 PISA results, researchers found that if U.S. students had an income distribution similar to that of other countries in the analysis, the average reading scores would be higher than those of the other countries and the average math scores would be about the same.74 Furthermore, these researchers suggest that examining trends for students at varying income distributions over time would be more useful than examining average scores over time.
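The general logic of this kind of adjustment can be sketched as a reweighting exercise: hold each income group's average score fixed and recompute the national average using another population's income distribution. The sketch below is for illustration only. The group labels, scores, and population shares are hypothetical, and the function name is an assumption. It is not the method or the data used in the analysis cited above.

```python
def weighted_mean(group_means, shares):
    """Average score across groups, weighting each group by its population share."""
    total_share = sum(shares[group] for group in group_means)
    weighted_sum = sum(group_means[group] * shares[group] for group in group_means)
    return weighted_sum / total_share


# Hypothetical average scores by family income group for one country.
group_means = {"lower": 450.0, "middle": 500.0, "higher": 550.0}

# Hypothetical population shares: the country's own, and a reference distribution
# with fewer lower-income families.
own_shares = {"lower": 0.40, "middle": 0.40, "higher": 0.20}
reference_shares = {"lower": 0.20, "middle": 0.50, "higher": 0.30}

observed_average = weighted_mean(group_means, own_shares)
adjusted_average = weighted_mean(group_means, reference_shares)

print(f"observed average: {observed_average:.1f}")
print(f"average under the reference income distribution: {adjusted_average:.1f}")
```

In this hypothetical case, the adjusted average is higher than the observed average even though no group's performance changed. Only the mix of students differs, which is why comparing national averages alone can obscure how students at similar income levels perform across countries.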

Comparing Results Across Assessments

Since U.S. students participate in national assessments and several international assessments, there is a natural inclination to want to compare results from one assessment to another, especially when results are released within a short timeframe. The most frequently administered assessments for U.S. students are annual statewide assessments and biennial NAEP assessments. It often appears as if there is overlap in the content, timing, and grade-levels assessed, so it raises the question: can NAEP be compared to the results of statewide assessment systems required by the ESEA?

Although U.S. students participate in international assessments less frequently, there is also apparent overlap in the content, timing, and grade-levels assessed. This leads to questions such as, can NAEP be compared to international assessments? If NAEP and TIMSS both measure 8th grade mathematics, are those results comparable? If NAEP and PIRLS both measure 4th grade reading, are those results comparable?

The answers to these questions largely depend on the alignment between assessments and the purpose of the comparison. The following section of the report discusses some of the alignment studies that have been conducted and the usefulness of making comparisons across large-scale assessments.

NAEP and Statewide Assessments Comparisons

Both NAEP and statewide assessments measure 4th and 8th grade achievement in reading and mathematics. They both report scaled scores and performance levels of students in these content areas. These similarities may lead some to question whether these assessments are comparable or even redundant. While national and state assessments may appear to have significant similarities, each was designed for a different purpose and by different stakeholders. There are three main issues to contemplate when considering making a comparison: the alignment of content standards, the scale, and the definition of performance standards.

NAEP and statewide assessments overlap in the sense that both assessment programs measure mathematics and reading achievement. The assessment programs, however, use different frameworks to decide what mathematics and reading content will be measured. For NAEP, the NAGB determines what students know and should be able to do in various content areas based on the knowledge and experience of various stakeholders, such as content area experts, school administrators, policymakers, teachers, and parents. The content assessed by NAEP is not aligned to any particular content standards.75 The specific content measured by statewide assessments, however, is aligned with the state's content standards. Each state has a different process for determining its content standards for mathematics and reading, but, like NAEP, it also includes input from multiple stakeholders.

While it may not be feasible to study the content alignment between NAEP and all states' content standards, there was a recent alignment study between NAEP and the Common Core State Standards (CCSS). The study used an expert panel to study the alignment of NAEP and CCSS in 4th and 8th grade mathematics. The study found 79% alignment for 4th grade students and 87% alignment for 8th grade students, concluding that alignment between NAEP and CCSS was "strong."76 Other investigations have examined the alignment of NAEP reading and writing frameworks and the CCSS English language arts standards; however, these examinations did not determine a degree of alignment.77 It is important to note that many states are not currently using the CCSS or are using a modified version of the CCSS. From the data presented here, it is not possible to determine how well the NAEP framework aligns with specific state content standards in mathematics and reading.

Even if a "strong" alignment between NAEP frameworks and state content standards is assumed, there are other difficult issues to consider when making comparisons between the assessment results. For example, NAEP and statewide assessments use different scales. NAEP scaled scores for reading and mathematics are reported on a scale from 0 to 500. Statewide assessments use a scale that is specific to the assessment used in each state. For the purpose of illustration, consider three common assessments that are in place across some states: the ACT Aspire, the Partnership for Assessment of Readiness for College and Careers (PARCC), and the Smarter Balanced Assessment Consortium (SBAC).78 The ACT Aspire uses a scale that typically reports achievement in the 400-500 range (grades 3-10).79 The PARCC scale scores range from 650 to 850 (grades 3-11).80 The SBAC scale scores range from 2114 to 2795 (grades 3-8, and 11th grade).81 Clearly, given these different scales, a scaled score cannot be compared across NAEP and a statewide assessment. Furthermore, improvement in scaled scores cannot be directly compared. If a group of students improves 20 points on the 4th grade NAEP reading assessment, it is not equivalent to a 20-point improvement on a statewide assessment, such as the PARCC or SBAC.
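One way to see why equal point gains are not equivalent across scales is to express each gain relative to the spread of scores on its own scale. The sketch below is for illustration only. The standard deviations are hypothetical placeholders rather than published values for NAEP or any statewide assessment, and the scale labels are assumptions. Even gains expressed this way would not be comparable unless the assessments measured the same content in the same population.

```python
def standardized_gain(point_gain, scale_sd):
    """Express a point gain in standard deviation units of its own scale."""
    return point_gain / scale_sd


# Hypothetical standard deviations for two differently constructed scales.
HYPOTHETICAL_SD = {"scale_a": 35.0, "scale_b": 90.0}

point_gain = 20.0
gains = {name: standardized_gain(point_gain, sd)
         for name, sd in HYPOTHETICAL_SD.items()}

for name, gain in gains.items():
    print(f"{name}: a {point_gain:.0f}-point gain is {gain:.2f} standard deviations")
```

Under these assumed values, the same 20-point gain is a much larger change on the scale with the smaller spread of scores than on the scale with the larger spread.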

If scaled scores cannot be compared, what about performance standards? A performance standard is a generally agreed upon definition of a certain level of performance in a content area that is expressed in terms of a cut score (e.g., basic, proficient, advanced) for a given assessment. There are no generally agreed upon performance standards that apply to both NAEP and state assessments, so performance standards cannot be compared across assessments. For example, as discussed earlier, NAEP defines performance standards as basic, proficient, and advanced.82 By contrast, PARCC and SBAC use levels as performance standards. For example, "Level 4" (out of 5) on the PARCC corresponds to "met expectations." Although it seems similar, it is unlikely that "met expectations" on the PARCC represents the same level of achievement as "proficient" on the NAEP. Setting cut points for these levels requires a specific standard-setting process that is assessment-specific, so it is unlikely that meeting expectations on one assessment corresponds to the same level of performance as being proficient on another.

Perhaps even more difficult to reconcile may be when states and NAEP use the same performance standards terminology. For example, the state of Alaska uses four performance standards: Far Below Proficient, Below Proficient, Proficient, and Advanced. "Proficient" is defined as "meets the standards at a proficient level, demonstrating knowledge and skills of current grade-level content."83 Unlike NAEP, the "proficient" definition does not necessarily include application of skills to real-world situations or analytical skills. Neither definition of "proficient" is correct or incorrect, but these definitions demonstrate the difficulty in comparing "proficient" performance standards of NAEP to those of state assessments.

In an effort to examine how closely the performance standards of NAEP reflect those used in the states, NCES released an alignment study to map state performance standards onto the NAEP scale.84 This mapping study is not an evaluation of the quality of state performance standards or NAEP performance standards but rather is intended to give context to the discussion of comparing performance standards. The study found that most "proficient" state standards in 4th and 8th grade reading and mathematics mapped at the NAEP "basic" level. This finding reinforces the difficulty in comparing NAEP to statewide assessments. Since the "proficient" performance standard on many statewide assessments may be more comparable to the "basic" performance standard on NAEP, it may not be possible to make meaningful comparisons between state assessments and NAEP using performance standards. Given the difference in the meaning of "proficient" across assessments, the number of students "proficient" on NAEP will likely be lower than the number of students "proficient" on most state assessments. If fewer students score at the "proficient" performance standard on NAEP, it does not mean that either the NAEP or statewide assessment measured achievement correctly or incorrectly. Rather, the assessments used a different assessment framework and cut score to define the performance standard of "proficient."
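The basic idea behind mapping a state performance standard onto the NAEP scale can be sketched as percentile matching: find the NAEP score that the same percentage of students reach or exceed as were reported "proficient" on the state assessment. The sketch below is a simplified illustration only and is not the NCES procedure, which involves sampling weights and other adjustments. The function name, the scores, and the percentages are all hypothetical.

```python
def naep_equivalent_cut(naep_scores, percent_proficient_on_state_test):
    """Return the NAEP score reached or exceeded by the given percentage of students.

    Simplified, unweighted percentile matching (an assumption for illustration).
    """
    ordered = sorted(naep_scores)
    n = len(ordered)
    # Number of students who should score at or above the mapped cut score.
    count_at_or_above = round(n * percent_proficient_on_state_test / 100)
    count_at_or_above = max(1, min(n, count_at_or_above))
    return ordered[n - count_at_or_above]


# Hypothetical NAEP scores for 100 students in one state: 200, 201, ..., 299.
hypothetical_naep_scores = list(range(200, 300))

# A lenient state standard (70% proficient) maps to a lower NAEP score than a
# stringent one (30% proficient).
lenient_cut = naep_equivalent_cut(hypothetical_naep_scores, 70)
stringent_cut = naep_equivalent_cut(hypothetical_naep_scores, 30)

print(f"NAEP equivalent of the lenient standard: {lenient_cut}")
print(f"NAEP equivalent of the stringent standard: {stringent_cut}")
```

In this simplified view, a state that reports a large share of students as "proficient" will have its standard map to a relatively low point on the NAEP scale, which is consistent with many state "proficient" standards mapping at the NAEP "basic" level.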

NAEP and International Assessments

Both NAEP and international assessments measure reading and mathematics performance of students around 4th and 8th grade. For instance, NAEP, TIMSS, and PISA all measure mathematics performance for students around 8th grade. NAEP and PIRLS both measure 4th grade reading performance. NAEP and PISA both measure reading performance around 8th grade. Comparing NAEP to international assessments requires considering some of the same issues as comparing NAEP to statewide assessment systems: the scale, the definition of performance standards, and the alignment between the assessments. There are also some additional considerations, including the different target populations, participating education systems, differences in voluntary student participation, and the precision of measurement.