Educational Assessment and the Elementary and Secondary Education Act

The Elementary and Secondary Education Act (ESEA), as amended by the Every Student Succeeds Act (ESSA; P.L. 114-95), specifies the requirements for assessments that states must incorporate into their state accountability systems to receive funding under Title I-A. While many of the assessment requirements of the ESEA have not changed from the requirements put into place by the No Child Left Behind Act (NCLB; P.L. 107-110), the ESSA provides states some new flexibility in meeting them. This report has been prepared in response to congressional inquiries about the revised educational accountability requirements in the ESEA, enacted through the ESSA, and implications for state assessment systems that are used to meet these requirements. While these changes have the potential to add flexibility and nuance to state accountability systems, for these systems to function effectively the changes need to be implemented in such a way as to maintain the validity and reliability of the required assessments. To this end, the report also explores current issues related to assessment and accountability changes made by the ESSA.

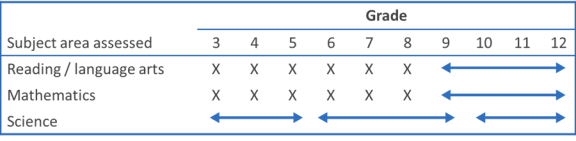

The ESEA continues to require that states implement high-quality academic assessments in reading, mathematics, and science. States must test all students in reading and mathematics annually in grades 3 through 8 and once in high school. States must also test all students in science at least once within three grade spans (grades 3-5, 6-9, and 10-12). Assessments in other grades and subject areas may be administered at the discretion of the state. All academic assessments must be aligned with state academic standards and provide “coherent and timely” information about an individual student’s attainment of state standards and whether the student is performing at grade level (e.g., proficient). The reading and mathematics assessment results must be used as indicators in a state’s accountability system to differentiate the performance of schools.

State accountability systems continue to be required to report on student proficiency on reading and mathematics assessments. However, a singular focus on student proficiency has been criticized for many reasons, most notably that proficiency may not be a valid measure of school quality or teacher effectiveness. It is at least partially a measure of factors outside of the school’s control (e.g., demographic characteristics, prior achievement), and may result in instruction being targeted toward students just below the proficient level, possibly at the expense of other students. In response, the ESSA provides the option for student achievement to be measured based on proficiency and student growth. While measures of student growth remain optional, prior to the enactment of the ESSA, states were only able to include measures of student growth in their accountability systems if they received a waiver from the U.S. Department of Education to do so.

The ESSA also authorizes two new assessment options to meet the requirements discussed above. First, in selecting a high school assessment for reading, mathematics, or science (grades 10-12), a local educational agency (LEA) may choose a “nationally-recognized high school academic assessment,” provided that it has been approved by the state. Second, the ESSA explicitly authorizes the use of “computer adaptive assessments” as state assessments. Previously, it was unclear whether computer adaptive assessments met the requirement that statewide assessments be the same assessments used to measure the achievement of all elementary and secondary students. Computer adaptive assessments adjust to a student’s individual responses, which means that students will not all see the same questions. The ESSA added language clarifying that students do not have to be offered the same assessment items on a computer adaptive assessment. The ESSA also authorizes an exception to state assessment requirements for 8th grade students taking advanced mathematics in middle school that permits them to take an end-of-course assessment rather than the 8th grade mathematics assessment, provided certain conditions are met.

The ESSA added specific provisions related to the assessment of “students with the most significant cognitive disabilities” that were previously addressed only in regulations. It also changed how the assessment results of English learners (ELs) are included in states’ accountability systems.

Additionally, the ESEA as amended through the ESSA now requires LEAs to notify parents of their right to receive information about assessment opt-out policies in the state. If excessive numbers of students opt out of state assessments, however, it may undermine the validity of a state’s accountability system.

States continue to be required to administer 17 assessments annually to meet the requirements of Title I-A. These requirements have been implemented within a crowded landscape of state, local, and classroom uses of educational assessments, raising concerns about over-testing of students. The ESSA added three new provisions related to testing burden: (1) each state may set a target limit on the amount of time devoted to the administration of assessments; (2) LEAs are required to provide information on the assessments used, including the amount of time students will spend taking them; and (3) the Secretary of Education may reserve funds from the State Assessment Grant program for state and LEA assessment audits.

Contents

- Overview

- Assessments in Elementary and Secondary Schools Under the ESEA

- State Assessments

- General Requirements

- State Assessment Grants

- State Options for Meeting Assessment Requirements

- Brief History of State Assessments

- Past Implementation of State Assessment Systems

- Current Implementation of State Assessment Systems

- Assessment and Accountability Issues Receiving Attention

- Assessment of Student Proficiency and Growth

- Assessments for Students with Special Needs

- Students with Disabilities

- English Learners

- Computer Adaptive Assessment

- Student Opt-Out Practices

- Testing Burden

Overview

The Elementary and Secondary Education Act (ESEA), as amended by the Every Student Succeeds Act (ESSA; P.L. 114-95), specifies the requirements for the state assessments that states must incorporate into their state accountability systems to receive funding under Title I-A. Title I-A of the ESEA authorizes aid to local educational agencies (LEAs) for the education of disadvantaged children. Title I-A grants provide supplementary educational and related services to low-achieving and other students attending elementary and secondary schools with relatively high concentrations of students from low-income families. As a condition of receiving Title I-A funds, states, LEAs, and public schools must comply with numerous requirements related to standards, assessments, and academic accountability systems. All states currently accept Title I-A funds. For FY2017, the program was funded at $15.5 billion.

The ESEA requires that states implement high-quality academic assessments in reading, mathematics, and science. States must test all students in reading and mathematics annually in grades 3 through 8 and once in high school. States must also test all students in science at least once within three grade spans (grades 3-5, 6-9, and 10-12). The ESEA therefore requires states to implement annually, at a minimum, 17 assessments for students in elementary and secondary schools.1
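The count of 17 follows directly from the grade and subject requirements in the preceding paragraph. As a quick check, the tally can be sketched in Python; the variable and function names below are illustrative only and are not drawn from the statute.

```python
# Tally the minimum number of annual state assessments described above.
# Names are illustrative, not statutory terms.

ANNUAL_GRADES = range(3, 9)  # grades 3 through 8, tested every year
SUBJECTS_TESTED_ANNUALLY = ("reading", "mathematics")
SCIENCE_GRADE_SPANS = ((3, 5), (6, 9), (10, 12))


def minimum_required_assessments() -> dict:
    counts = {}
    for subject in SUBJECTS_TESTED_ANNUALLY:
        # One assessment per grade in grades 3-8, plus one in high school.
        counts[subject] = len(ANNUAL_GRADES) + 1
    # Science: at least once within each of the three grade spans.
    counts["science"] = len(SCIENCE_GRADE_SPANS)
    return counts


counts = minimum_required_assessments()
total = sum(counts.values())
print(counts, total)
```

Reading and mathematics each contribute seven assessments and science contributes three, which gives the minimum of 17.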

While many of the assessment requirements of the ESEA have not changed from the requirements put into place by the No Child Left Behind Act (NCLB; P.L. 107-110), the ESSA provides states some new flexibility in meeting them. For example, states may choose to administer one summative assessment or multiple statewide interim assessments that result in a single summative score. For the first time, the ESSA allows states to use "nationally recognized tests" to meet the high school assessment requirement, provided there is evidence the tests align with state standards. It also includes provisions for states' use of alternate assessments for students with the "most significant cognitive disabilities," which was previously addressed only in regulations.2

The ESSA explicitly authorizes the use of computer adaptive assessments. Computer adaptive assessments adjust to a student's individual responses, which means that students will not all see the same questions. The ESSA also authorizes an exception to state assessment requirements for 8th grade students taking advanced mathematics in middle school that permits them to take an end-of-course assessment rather than the 8th grade mathematics assessment, provided certain conditions are met. In addition, the ESSA authorizes a new demonstration authority for states to create an "innovative assessment system" that would permit them to use different assessment formats (e.g., competency-based assessments)3 and assessments that validate when students are ready to demonstrate mastery or proficiency, and allow for differentiated student support based on individual learning needs. The ESSA also includes a new provision that permits the Secretary of Education (the Secretary) to reserve a portion of funds available under the State Assessment Grant program to award competitive grants to states for assessment audits.

The purpose of this report is to describe the general assessment requirements of the ESEA as amended and discuss the new flexibility states have in meeting these requirements. The report also discusses some issues related to the changes enacted by ESSA regarding the use of assessments in accountability systems that are receiving attention as they are implemented. More specifically, this report examines issues related to assessment of student growth, assessment of students with disabilities and English learners, the use of computer adaptive assessments, student assessment participation requirements, and testing burden. While these changes have the potential to add flexibility and nuance to state accountability systems, states face the challenge of implementing them in such a way as to maintain the validity and reliability of the required assessments.

Assessments in Elementary and Secondary Schools Under the ESEA

Students participate in many assessments in elementary and secondary schools. As mentioned above, the ESEA requires states to administer annually, at a minimum, 17 assessments collectively in reading, mathematics, and science.4 To meet this requirement, states typically use summative assessments.5 For reading and mathematics, the results of these assessments are then used in the state accountability system to differentiate schools based, in part, on student performance. In addition to these assessments, states, LEAs, and schools administer a number of other assessments for different purposes and within different content areas.6 The focus of this report is on the assessments required in Title I-A of the ESEA.

State Assessments

Title I-A of the ESEA outlines the requirements for state assessment and accountability systems. Section 1111(b) specifically details the "academic assessments" requirements.7 While many of the requirements for academic assessments have not changed from the requirements put in place by the NCLB, a few changes are noteworthy. This section discusses the general state academic assessment requirements and highlights some of the changes made by the ESSA. It also discusses the State Assessment Grants program, including how funds are allocated to states. The section closes with a discussion of the Secretary's new ability to reserve funds under the State Assessment Grants program to make competitive grants to states for conducting assessment audits, and a new authority included in the ESSA related to the use of innovative assessments.

General Requirements

This section of the report examines current ESEA assessment requirements with respect to the content areas and grades assessed, student participation, properties of the assessments, new assessment options, and public information about assessments. In each case, the discussion specifies whether current requirements existed prior to the implementation of the ESSA or whether they were added by the ESSA.

Content Areas and Grades Assessed

Under the requirements of the ESEA both prior to and following the enactment of the ESSA, each state must implement a set of high-quality academic assessments in reading, mathematics, and science. Reading and mathematics assessments must be administered annually in grades 3 through 8 and once in high school. Science assessments must be administered at least once within three grade spans (grades 3-5, 6-9, and 10-12). Assessments in other grades and subject areas may be administered at the discretion of the state. All academic assessments must be aligned with state academic standards and provide "coherent and timely" information about an individual student's attainment of state standards and whether the student is performing at grade level. The reading and mathematics assessment results must be used in the state's accountability system to differentiate the performance of schools.

Figure 1. Subject Areas and Grades That Must Be Assessed Annually Under Title I-A of the ESEA

Source: Figure prepared by the Congressional Research Service.

Student Participation in Assessments

For the most part, under the ESEA, the same academic assessments must be used to measure the achievement of all public elementary and secondary school students in a state.8 States are required to measure annually the achievement of not less than 95% of all students and 95% of all students in each subgroup9 on the required assessments.10 A state must explain how this requirement will be incorporated into its accountability system.
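The 95% requirement applies separately to the all students group and to each subgroup, so a state can meet it overall while missing it for one subgroup. The following Python sketch illustrates that check; the data layout, the subgroup labels, and the counts are hypothetical, and how a state treats a shortfall in its accountability system is not modeled.

```python
# Check the 95% participation requirement for all students and each subgroup.
# The data layout, subgroup labels, and counts are hypothetical.

REQUIRED_PERCENT = 95


def meets_participation_requirement(tested: int, enrolled: int) -> bool:
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    # Integer arithmetic avoids floating-point issues exactly at 95%.
    return tested * 100 >= REQUIRED_PERCENT * enrolled


def groups_below_requirement(counts: dict) -> list:
    """counts maps a group name to a (tested, enrolled) pair."""
    return [
        name
        for name, (tested, enrolled) in counts.items()
        if not meets_participation_requirement(tested, enrolled)
    ]


example = {
    "all students": (960, 1000),
    "English learners": (90, 100),
    "students with disabilities": (114, 120),
}
shortfalls = groups_below_requirement(example)
print(shortfalls)
```

In this made-up example the all students group is at 96% and students with disabilities are exactly at 95%, while English learners are at 90% and would be flagged.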

As mentioned above, academic assessments must be the same academic assessments used to measure the achievement of all students, which includes most students with disabilities and English learners (ELs). Students with the "most significant cognitive disabilities" may participate in an alternate assessment aligned with alternate achievement standards. For ELs, under certain circumstances, assessments may be administered in the language and form that is most likely to produce accurate results.

The ESSA added two new provisions related to a parent's right to opt a child out of the required assessments. First, it added a provision that provides that nothing "shall be construed as preempting a state or local law regarding the decision of a parent to not have the parent's child participate in the academic assessments."11 Second, the ESSA now requires any LEA receiving Title I-A funds to notify parents of their right to receive information about assessment participation requirements, which must include a policy, procedure, or parental right to opt the child out of state assessments.12

Properties of the Assessment

State assessments must meet several requirements regarding (1) format, (2) administration, and (3) technical quality. While a required assessment format is not specified, the ESEA continues to require that the assessment measures higher-order thinking skills and understanding. The ESSA also adds some examples of how this requirement may be met, which may include measures of student growth and "may be partially delivered in the form of" portfolios, projects, or extended performance tasks.

The ESSA provides some new flexibility regarding the administration of state assessments. Prior to its enactment, states were required to administer a single, summative assessment to meet the requirements of Title I-A. Under the ESEA as amended by the ESSA, states have the option of using a single, summative assessment or multiple statewide interim assessments that result in a single summative score. The ESSA also includes a new provision that allows each state to set a target limit on the amount of time devoted to the administration of assessments for each grade, expressed as a percentage of annual instructional hours.13

States must continue to provide evidence that academic assessments are of adequate technical quality for each purpose required under the ESEA. Prior to the enactment of the ESSA, states were required to provide this evidence to the Secretary and make it public upon the Secretary's request. Under the ESEA as amended by the ESSA, states are now required to include such evidence on the state education agency's (SEA's) website. As required prior to the enactment of the ESSA, state assessments must be used for purposes for which they are valid and reliable and must be consistent with nationally recognized professional and technical standards.14 They must also "objectively measure academic achievement, knowledge, and skills." To increase the likelihood of valid and reliable results, the ESSA continues to require that states use "multiple up-to-date measures" of academic achievement.15

New Assessment Options

The ESSA explicitly authorizes two new assessment options to meet the requirements discussed above. First, in selecting a high school assessment for reading, mathematics, or science, an LEA may choose a "nationally-recognized high school academic assessment," provided that it has been approved by the state.16 To receive state approval, the nationally recognized high school assessment must (1) be aligned to the state's academic content standards; (2) provide comparable, valid, and reliable data as compared to the state-designed assessments for all students and each student subgroup; (3) meet all general requirements for state assessments, including technical quality; and (4) provide for unbiased, rational, and consistent differentiation between schools in the state.

Second, the ESSA explicitly authorizes the use of "computer adaptive assessments" as state assessments. Previously, it was unclear whether computer adaptive assessments met the requirement that statewide assessments be the same assessments used to measure the achievement of all elementary and secondary students. Computer adaptive assessments adjust to a student's individual responses, which means that students will not all see the same questions. The ESSA added language clarifying that students do not have to be offered the same assessment items on a computer adaptive assessment. Computer adaptive assessments, however, must meet one explicit requirement beyond the general requirements of state assessments.17 The computer adaptive assessment must, at a minimum, measure each student's academic proficiency in state academic standards for the student's grade level and growth toward such standards.18 Once the assessment has measured the student's proficiency at grade level, it "may" measure the student's level of academic proficiency above or below the student's grade level.
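To illustrate why students taking a computer adaptive assessment see different items, the following Python sketch selects each next item based on the student's earlier responses. It is a simplified illustration under stated assumptions, not the method used by any state assessment: operational adaptive tests typically rely on statistical scoring models, and the item bank, difficulty scale, step sizes, and simulated students here are all hypothetical. The sketch also does not model the statutory requirement that grade-level proficiency be measured.

```python
# Minimal sketch of adaptive item selection (not an operational scoring model).
# The item bank, difficulty scale, and step sizes are hypothetical.

def run_adaptive_test(item_difficulties, answers_correctly, num_items=5,
                      start_estimate=0.0, start_step=1.0):
    """Choose each next item based on the student's earlier responses."""
    remaining = list(item_difficulties)
    estimate, step = start_estimate, start_step
    administered = []
    for _ in range(min(num_items, len(remaining))):
        # Pick the unused item whose difficulty is closest to the estimate.
        item = min(remaining, key=lambda d: abs(d - estimate))
        remaining.remove(item)
        correct = answers_correctly(item)
        administered.append((item, correct))
        # Raise the estimate after a correct answer, lower it after a miss.
        estimate += step if correct else -step
        step /= 2  # smaller adjustments as responses accumulate
    return estimate, administered


bank = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
# Two simulated students: each answers correctly when an item's difficulty
# is at or below that student's (unobserved) ability.
strong_estimate, strong_items = run_adaptive_test(bank, lambda d: d <= 1.2)
weak_estimate, weak_items = run_adaptive_test(bank, lambda d: d <= -0.8)
print([d for d, _ in strong_items])
print([d for d, _ in weak_items])
```

Both simulated students start with the same item, but their item sequences diverge after the first response, which is the behavior the ESSA language on assessment items addresses.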

The ESSA also authorized an exception to state assessment requirements for 8th grade students taking advanced mathematics in middle school. These students are now permitted to take an end-of-course assessment rather than the 8th grade mathematics assessment, provided certain conditions are met. For example, a student benefitting from this exception must take a mathematics assessment in high school that is more advanced than the assessment taken at the end of 8th grade.

Public Information about Assessments

The ESSA added specific requirements that each LEA receiving funds under Title I-A must provide information to the public regarding state assessments. More specifically, for each grade served by the LEA, it must provide information on (1) the subject matter being assessed, (2) the purpose for which the assessment is designed and used, (3) the source of the requirement of the assessment, (4) the amount of time students will spend taking assessments, (5) the schedule of assessments, and (6) the format for reporting results of assessments (if such information is available).19

Consequences Associated with Assessments

While this report focuses on the assessments required by the ESEA, the assessments are used in a state accountability system that determines which schools will be identified for support and improvement based on their performance. Prior to the implementation of the ESSA, states were required to determine whether schools and LEAs were making adequate yearly progress (AYP) based on the percentage of all students and the percentage of students by subgroup who (1) scored at the proficient level or higher on the mathematics and reading assessments, (2) met the requirements of an additional academic indicator, which had to be a graduation rate for high school students, and (3) met an assessment participation rate. The failure of the all students group or any subgroup to meet any of these requirements for two consecutive years or more triggered a set of outcome accountability consequences that required schools and LEAs to take actions specified in statutory language. States were required to establish goals for proficiency on the mathematics and reading assessments based on 100% of students reaching the proficient level by the end of the 2013-2014 school year; as that deadline drew closer, more schools and LEAs failed to meet at least one of the requirements to make AYP.20 Thus, under the accountability system prior to the enactment of the ESSA, it was possible that based on student assessment results, all or most LEAs and schools in a state could fail to make AYP and be required to implement various improvement requirements. This system of making outcome accountability decisions based on assessment results was considered a high-stakes assessment system, as poor performance on either the mathematics or reading assessment by the all students group or any individual subgroup could trigger consequences.

Under the ESEA following the enactment of the ESSA, changes have been made to the accountability system that could lessen the high-stakes association between student assessment results and the determination of whether a school is identified for improvement, depending on decisions made by the state in designing its accountability system. Under the ESSA, student proficiency on the mathematics and reading assessments is still considered in the state accountability system along with several other indicators.21 Based on these indicators, the SEA must annually establish a system for "meaningfully differentiating" all public schools.22 The system must also identify any public school in which any subgroup of students is "consistently underperforming," as determined by the state.23 The results of this process are used to help determine which public schools need additional support to improve student achievement.

Based on this system of meaningful differentiation, an SEA informs LEAs in its state which low-achieving schools within the LEA require comprehensive support and improvement. More specifically, SEAs are required to identify for comprehensive support and improvement: (1) at least the lowest-performing 5% of all schools receiving Title I-A funds, (2) all public high schools failing to graduate 67% or more of their students, (3) schools required to implement additional targeted support that have not improved in a state-determined number of years,24 and (4) additional statewide categories of schools, at the state's discretion.25 States must also inform LEAs whether there are subgroups of "consistently underperforming" students within a school that have been identified for targeted support and improvement.26 Depending on how a state chooses to identify schools for comprehensive or targeted support and improvement, the number or percentage of schools in a given state that may be subject to outcome accountability requirements may be substantially lower than the number or percentage of schools that were subject to outcome accountability requirements prior to the enactment of the ESSA.
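The first two identification categories above are numeric rules, and a short Python sketch may help show how they operate together. It is an illustration under several assumptions: the school records, the single composite "score" used for ranking, and the field names are hypothetical; the graduation rule is read here as a graduation rate below 67%, with the treatment of the exact boundary assumed; and the third and fourth categories, which depend on state decisions, are not modeled.

```python
# Sketch of two of the identification rules listed above.
# Record fields and the composite "score" are hypothetical; the graduation
# rule is read here as "graduation rate below 67%".

def identify_for_comprehensive_support(schools, lowest_percent=5,
                                       graduation_cutoff=0.67):
    identified = set()
    # Rule 1: at least the lowest-performing 5% of Title I-A schools.
    title_i = sorted((s for s in schools if s["title_i"]),
                     key=lambda s: s["score"])
    # Integer ceiling, so "at least 5%" is never rounded down.
    n_lowest = (len(title_i) * lowest_percent + 99) // 100
    identified.update(s["name"] for s in title_i[:n_lowest])
    # Rule 2: any public high school below the graduation cutoff.
    for s in schools:
        rate = s.get("graduation_rate")  # None for non-high schools
        if rate is not None and rate < graduation_cutoff:
            identified.add(s["name"])
    return identified


# Hypothetical data: 19 Title I-A schools, one Title I-A high school with a
# high graduation rate, and one non-Title I-A high school with a low rate.
schools = [{"name": f"S{i}", "title_i": True, "score": i} for i in range(19)]
schools.append({"name": "H2", "title_i": True, "score": 50,
                "graduation_rate": 0.90})
schools.append({"name": "H1", "title_i": False, "score": 40,
                "graduation_rate": 0.60})
result = identify_for_comprehensive_support(schools)
print(sorted(result))
```

In this example only two of the 21 schools are identified, which illustrates how the share of schools subject to these requirements can be small relative to a system in which any missed target triggers consequences.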

Thus, as a result of the changes made by the ESSA, the high-stakes emphasis placed on assessment in the accountability system may be diminished relative to the emphasis placed on assessment prior to the enactment of the ESSA. No longer does poor overall student performance or subgroup performance on an assessment have to mean that a school is automatically identified as being in need of improvement. States determine how much weight to put on assessment results in the accountability system and the extent to which schools will be identified for comprehensive or targeted support and improvement as a result of their performance on all of the indicators included in the state accountability system.

State Assessment Grants

The ESEA continues to authorize funding for State Assessment Grants.27 Two funding mechanisms continue to be authorized: (1) formula grants to states for the development and administration of the state assessments required under Title I-A, and (2) competitive grants to states to carry out related activities beyond the minimum assessment requirements. The allocation of funds depends on a "trigger amount" within the legislation. For annual appropriations at or below the trigger amount, the entire appropriation is used to award formula grants to states. For an annual appropriation above the trigger amount, the difference between the appropriation and trigger amount is used to award competitive grants to states.28

For amounts equal to or less than the trigger amount, the Secretary must reserve 0.5% of the appropriation for the Bureau of Indian Education and 0.5% of the appropriation for the Outlying Areas prior to awarding formula grants to states. The Secretary has the option of reserving up to 20% of the funds appropriated to make grants for assessment system audits. After making these reservations, the Secretary then provides each state with a minimum grant of $3 million. Any remaining funds are subsequently allocated to states in proportion to their number of students ages 5 to 17.
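The allocation steps described above can be sketched as a short calculation. The Python example below is a simplified illustration, not the Department of Education's actual procedure: the appropriation, trigger amount, state names, and population figures are placeholder inputs, and the base to which each reservation percentage is applied is an assumption.

```python
# Sketch of the State Assessment Grant split and formula allocation.
# Dollar inputs and state data are placeholders; the base used for each
# reservation percentage is an assumption.

MINIMUM_STATE_GRANT = 3_000_000


def split_appropriation(appropriation, trigger_amount):
    """Return (formula_funds, competitive_funds)."""
    formula = min(appropriation, trigger_amount)
    return formula, appropriation - formula


def allocate_formula_grants(formula_funds, school_age_population,
                            audit_share=0.0):
    """Allocate formula funds; population maps state -> students ages 5-17."""
    if not 0.0 <= audit_share <= 0.20:
        raise ValueError("audit reservation may not exceed 20%")
    bie = 0.005 * formula_funds       # Bureau of Indian Education
    outlying = 0.005 * formula_funds  # Outlying Areas
    audits = audit_share * formula_funds
    available = formula_funds - bie - outlying - audits
    remainder = available - MINIMUM_STATE_GRANT * len(school_age_population)
    if remainder < 0:
        raise ValueError("funds do not cover the minimum state grants")
    total_population = sum(school_age_population.values())
    # Each state receives the minimum plus a population-proportional share.
    return {
        state: MINIMUM_STATE_GRANT + remainder * population / total_population
        for state, population in school_age_population.items()
    }


formula_funds, competitive_funds = split_appropriation(120_000_000, 100_000_000)
grants = allocate_formula_grants(
    formula_funds, {"State A": 1_000_000, "State B": 3_000_000}
)
print(formula_funds, competitive_funds, grants)
```

With these placeholder figures, the amount above the trigger goes to competitive grants, and the formula funds remaining after the two 0.5% reservations are divided between the two hypothetical states as a $3 million minimum plus a share proportional to school-age population.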

Any funds appropriated in excess of the trigger amount are awarded by the Secretary to states and consortia of states through a competitive grant process. The funds are then used for assessment activities.29

Assessment System Audit

The ESEA as amended by the ESSA permits the Secretary to reserve funds from the formula grant portion of the State Assessment Grant program to make grants to states to conduct an assessment system audit. As previously noted, the Secretary may reserve up to 20% of the funds appropriated for state assessment formula grants30 for the purpose of conducting these audits. From the funds reserved, the Secretary makes annual grants to states of not less than $1.5 million to conduct a state assessment system audit and to provide subgrants to LEAs to conduct assessment audits at the LEA level.31 To receive a grant under this section, a state must submit an application to the Secretary detailing the assessment system audit, planned stakeholder participation and feedback, and subgrants to LEAs. Each state must ensure that LEAs conduct an audit of local assessments. Following the audit, each state is required to develop a plan to improve and streamline the state assessment system by eliminating unnecessary assessments, supporting the use of best practices from LEAs in other areas of the state, and supporting LEAs in streamlining local assessment systems. The state is required to report the results of the state and each LEA audit in a format that is publicly available.

Innovative Assessment and Accountability Demonstration Authority

The ESEA as amended by the ESSA includes a new demonstration authority for the development and use of an "innovative assessment system." States or consortia of states may apply for the demonstration authority to develop an innovative assessment system that "may include competency-based assessments, instructionally embedded assessments, interim assessments, cumulative year-end assessments, or performance based assessments that combine into an annual summative determination for each student" and "assessments that validate when students are ready to demonstrate mastery or proficiency and allow for differentiated student support based on individual learning needs."32 A maximum of seven SEAs, including not more than four states participating in consortia, may receive this authority. Separate funding is not provided under the demonstration authority; however, states may use formula and competitive grant funding provided through the State Assessment Grant program discussed above to carry out this demonstration authority.33

States and consortia may apply for an "initial demonstration period" of three years to develop innovative assessment systems and implement them in a subset of LEAs. If the initial demonstration period is successful, states and consortia may apply for a two-year extension in order to transition the innovative assessment system into statewide use by the end of the extension period. If the SEA meets all relevant requirements and successfully scales the innovative assessment system for statewide use, the state may continue to operate the innovative assessment system.

In general, applications for innovative assessment systems must demonstrate that the innovative assessments meet all the general requirements of state assessments discussed above.34 The only explicit differences between state assessment systems and innovative assessment systems are the format of assessment (i.e., competency-based assessments, instructionally embedded assessments, interim assessments, cumulative year-end assessments, and performance-based assessments) and that the reporting of results can be expressed in terms of "student competencies" aligned with the state's achievement standards.

State Options for Meeting Assessment Requirements

Both prior to and following the enactment of the ESSA, states have had the authority to select their own assessments and the academic content and performance standards to which the assessments must be aligned. For reading and mathematics assessments administered in grades 3-8, states use state-specific assessments, common assessments used by multiple states, or a combination of the two. For these subjects at the high school level, states use state-specific assessments, common assessments, and end-of-course assessments administered to all students. For science assessments, states can continue to use (1) state-specific assessments, (2) end-of-course exams for courses like biology or chemistry, or (3) a combination of the state-specific assessments and end-of-course exams. The ESSA added a new option for high school assessments, allowing states to use a "nationally recognized" high school academic assessment.35 While the state assessment landscape is constantly changing, examples of how states are currently meeting the ESEA assessment requirements are provided in the discussion that follows.

Brief History of State Assessments

When the NCLB was enacted in 2002, it expanded the number and role of state assessments required by the ESEA.36 Under the NCLB, each state developed and administered state-specific assessments aligned with state-specific academic content and performance standards. This resulted in each state having its own set of academic assessments, academic content standards, and academic performance standards denoting various levels of proficiency. Because each state had its own system, there was no straightforward way to compare student performance across states.

In 2009, due in part to this lack of standardization, there was a grassroots effort led by the National Governors Association (NGA) and the Council of Chief State School Officers (CCSSO) to develop a common set of standards for reading and mathematics, known as the Common Core State Standards Initiative (CCSSI).37 This effort was intended to create a set of college- and career-readiness standards that express what students should know and be able to do by the time they graduate from high school.38 Common content and performance standards, together with aligned assessments, could facilitate comparisons among states using the same standards and assessments. Adoption of these standards by states is optional.

While the federal government had no role in developing the standards, the Obama Administration expressed support for the standards and took three major steps to incentivize their adoption and implementation: (1) Race to the Top (RTT) State Grants, (2) RTT Comprehensive Assessment Systems (CAS) Grants, and (3) the ESEA flexibility package. Under the RTT State Grants program, participating states were required to "develop and implement common, high-quality assessments aligned with common college- and career-ready K-12 standards."39

To support the development of the required assessments included in the RTT State Grants program, the Department of Education (ED) created the RTT CAS Grant program and provided funds to two consortia of states for "the development of new assessment systems that measure student knowledge and skills against a common set of college- and career-ready standards."40 Both winning consortia, the Partnership for Assessment of Readiness for College and Careers (PARCC) and the Smarter Balanced Assessment Consortium (SBAC), proposed to use the Common Core State Standards as the common standards to which their assessments would be aligned. At the time the grants were awarded, 25 states and the District of Columbia were part of the PARCC coalition and 31 states were part of the SBAC coalition.41

In 2011, the Obama Administration announced the availability of an ESEA flexibility package that allowed states to receive waivers of various ESEA provisions if they adhered to certain principles. One of these principles was having college- and career-ready expectations for all students, including adopting college- and career-ready standards in reading and mathematics and aligned assessments. While it is not possible to know how many states may have ultimately adopted the Common Core State Standards or the assessments developed by PARCC or SBAC without the aforementioned federal incentives, initial interest and participation in the common assessment administration was high, followed by declining participation in later years.42

In addition to supporting the use of common standards, both the George W. Bush and Obama Administrations also supported measuring student achievement based on growth rather than proficiency only. ED encouraged states to incorporate measures of student growth into their state accountability systems through two sets of waivers. The first set was announced under the George W. Bush Administration in 2005. The growth model pilot waiver allowed states to add measures of student growth to their accountability systems to make determinations of adequate yearly progress (AYP).43 The Obama Administration subsequently required that states' assessments measure student growth in at least grades 3-8 and once in high school in order to receive waivers of various ESEA requirements.44

Past Implementation of State Assessment Systems

Two organizations, the Education Commission of the States (ECS) and Education Week, collect data on the types of assessments being used by states to meet the requirements of Title I-A. ECS collects information from state departments of education and tracks state assessment administration. The most recent information collection was published in January 2017.45 Education Week conducts an annual survey of current state assessment administration. The most recent information was published in February 2017.46 Thus, both surveys reflect the state of assessment prior to states submitting their state plan under the provisions of the recently amended ESEA.

Based on the data collected by these organizations, about half of all states reported using only state-specific assessments and end-of-course assessments in school year 2015-2016.47 Some states reported using a combination of common assessments, state-specific assessments, end-of-course exams, and nationally recognized high school academic assessments. For example, Michigan used a combination of state-specific assessment items and SBAC assessment items. Louisiana and Massachusetts state-level officials have approved a similar, blended approach. In these three examples of blended approaches, Michigan used the PSAT as a high-school assessment, Massachusetts used a state-specific assessment, and Louisiana used end-of-course assessments. Other states relied entirely on common assessments in the 2015-2016 school year.48 Seven states reported using only SBAC assessments,49 and four states reported using only PARCC.50

Current Implementation of State Assessment Systems

The current status of state assessment systems is in flux as states have been submitting their ESEA consolidated state plans to receive funding under a number of ESEA formula grant programs, including Title I-A, in 2017. Each state's plan must be approved by the Secretary. All 50 states, the District of Columbia, and Puerto Rico were required to submit ESEA state plans to ED by one of two deadlines (April 3, 2017, or September 18, 2017) to continue to receive funding under various ESEA formula grant programs, including Title I-A. ED maintains a website of state plans submitted for approval under the ESEA.51 Within the state plans, each state details the state assessments it will use to meet the Title I-A requirements.52 Based on information available from state plans that were approved by October 16, 2017, states have indicated that they expect to use combinations of assessments, such as SBAC, end-of-course assessments, and PSAT/SAT (e.g., Delaware and Nevada), or to rely primarily on state-specific annual assessments (e.g., New Jersey and New Mexico).

Assessment and Accountability Issues Receiving Attention

Several of the changes included in the ESEA as amended by the ESSA provide states with more flexibility with respect to using assessment for accountability purposes. Many of the changes have the potential of adding nuance to state accountability systems. For state accountability systems to function effectively, however, the changes to the requirements of Title I-A must be implemented in such a way as to retain the validity and reliability of state assessments and not to detract from the value or accuracy of state accountability systems. It remains important to understand how the measurement of student achievement with assessments affects determinations made by the state accountability systems.

This section of the report includes a discussion of several assessment-related issues that have garnered attention in relation to ESSA-enacted changes. These include issues related to assessment of student growth, assessments for students with special needs, computer adaptive assessments, student opt-out practices, and testing burden. The discussion identifies ways in which ESSA-enacted adjustments are intended to enhance the use of assessments in accountability systems and/or facilitate the ease of operating such systems. Some topics related to valid and reliable uses of assessment for purposes of accountability which may be relevant to these adjustments are discussed as well. These topics are ones that have surfaced in the reauthorization discourse or in the years in which NCLB provisions were in effect. They are presented not to forecast problems, but rather to explain some challenges that may have to be navigated and to identify some inherent tensions in using assessments in accountability systems. These issues may bear monitoring as ESSA-enacted adjustments are deployed.

Assessment of Student Proficiency and Growth

Prior to the enactment of the ESSA, state accountability systems were only required to report student proficiency, a static measure of student achievement. A singular focus on proficiency has been criticized for many reasons, most notably that proficiency may not be a valid measure of school quality or teacher effectiveness. Often, proficiency is a measure of factors outside of the school's control (e.g., demographic characteristics, prior achievement).53 One of the unintended consequences of static measures, like proficiency level, was the way instruction may have been targeted toward students just below the proficient level, possibly at the expense of other students.54 In an effort to raise the percentage of proficient students, schools and teachers may have targeted instructional time and resources toward those students who were near the proficiency bar. Because time and resources are limited, fewer instructional resources may have been available for students who were far below proficiency or those who were far above proficiency.

Under the ESEA as amended by the ESSA, states, LEAs, and schools are still required to report data on student proficiency on the required assessments. Some education policy groups have argued for a different way to report static achievement than has been used in the past.55 They have asserted that by continuing to focus only on proficiency, more emphasis will continue to be placed on students just below the level of proficiency. Some education policy groups have advocated instead for the use of a "performance index" for static measures.56 Using a performance index, states can get credit for reaching various levels of achievement (e.g., 0.75 points for partial proficiency, 1 point for reaching proficient, 1.25 points for reaching advanced levels). By using a performance index, some say, schools would have an incentive to try to increase the achievement of all students. Another way to report static achievement in a way that represents all students would be to report average scale scores. Scale scores are much more sensitive to changes in achievement than proficiency levels. They also have better statistical properties for research and analysis.57 Scale scores exist along a common scale so that they can be compared across students, subgroups, schools, and so forth. By placing assessment results along a common scale, changes over time can be determined more accurately.58
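
The difference between a percent-proficient measure and a performance index can be shown with a small sketch. The point values for partial proficiency, proficient, and advanced come from the example above; the zero-point "below" level, the level labels, and the function names are assumptions added for illustration.

```python
from typing import Iterable

# Points per achievement level. "below" (0 points) is an assumed lowest level.
INDEX_POINTS = {"below": 0.0, "partial": 0.75, "proficient": 1.0, "advanced": 1.25}


def percent_proficient(levels: Iterable[str]) -> float:
    """Share of students at or above the proficient level."""
    levels = list(levels)
    if not levels:
        raise ValueError("no students")
    return sum(level in ("proficient", "advanced") for level in levels) / len(levels)


def performance_index(levels: Iterable[str]) -> float:
    """Average index points earned per student."""
    levels = list(levels)
    if not levels:
        raise ValueError("no students")
    return sum(INDEX_POINTS[level] for level in levels) / len(levels)


# Hypothetical school: two students move from "below" to "partial".
before = ["below", "below", "partial", "proficient"]
after = ["partial", "partial", "partial", "proficient"]
```

In this hypothetical school, the percent proficient is the same before and after, while the performance index rises, which is the incentive effect that proponents of an index describe.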

To address some of the concerns raised about relying only on proficiency as a measure of student academic achievement on required assessments, the ESEA as amended by the ESSA specifically provides the option for student achievement to be measured using student growth as well.59 Prior to the enactment of the ESSA, some states had already incorporated specific measures of student growth into their accountability systems after obtaining a waiver from ED to do so.60 Under the ESEA as amended by the ESSA, additional states may choose to incorporate measures of student growth into their accountability systems, while other states may choose to revise their student growth measures.

The type of growth model selected for a state accountability system depends on many different factors beyond the type of assessment measure used. The selection of an assessment, however, can limit the choices states have in developing and implementing growth models. The remainder of this section discusses different types of growth models currently used by states and the assessment requirements of such models.

Measuring growth takes several forms. A review of 29 state accountability systems that use student growth identified three types of growth models:

- State-set targets of student growth (14 states): State-set targets measure the degree to which a student meets a set amount of growth or reaches a performance benchmark.

- Student growth percentiles (SGPs) (12 states): SGPs examine a student's academic achievement relative to academic peers who began at the same place. An SGP is a number from 1 to 99 that represents how much growth a student has made relative to his or her peers. An SGP of 85 would indicate that a student demonstrated more growth than 85% of peers.

- SAS EVAAS (3 states):61 This model measures value-added growth. "Value-added growth" refers to the additional positive effect that a certain factor has on student achievement above what is considered "expected" growth.62 This type of growth model follows students over time and provides projection reports on a student's future achievement.63
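
The idea behind an SGP can be sketched in a few lines. This is a deliberate simplification: it ranks a student's current score against a supplied list of academic peers (students assumed to have started at the same place), whereas operational SGP models are typically estimated with more elaborate statistical methods. The function name and the sample scores are hypothetical.

```python
from typing import Sequence


def simple_growth_percentile(student_score: float, peer_scores: Sequence[float]) -> int:
    """Percentage of academic peers the student outscored, reported as 1-99.

    peer_scores holds the current-year scores of students who had the same
    prior achievement as this student (an assumption of this sketch).
    """
    if not peer_scores:
        raise ValueError("at least one peer score is required")
    outscored = sum(1 for score in peer_scores if score < student_score)
    percentile = round(100 * outscored / len(peer_scores))
    # SGPs are reported on a 1 to 99 scale, so clamp the extremes.
    return max(1, min(99, percentile))


# Twenty hypothetical peers with current scores 200 through 219.
peers = list(range(200, 220))
sgp = simple_growth_percentile(217, peers)  # student outscored 17 of 20 peers
```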

The CCSSO also released a paper that outlines many considerations in the development and implementation of growth models in ESEA accountability systems.64 In this analysis, five common growth models were identified. These models are briefly described below, including information on their assessment requirements:

- Gain scores: Gain scores are calculated by finding the difference between test scores. Using scale scores, an earlier score (e.g., 3rd grade mathematics) is subtracted from a later score (e.g., 4th grade mathematics). In terms of assessment, the use of gain scores requires a vertical scale that creates a common scale across grade levels.65

- Growth rates: Growth rates are calculated by using multiple results of student assessment and statistically fitting a "trend line" across the data points. In order to aggregate the data across multiple grade levels, the growth rate may require vertically scaled scores.

- Student growth percentiles: As mentioned above, SGPs examine academic achievement compared to a student's peers who start at the same achievement level. These scores are based on the percentage of academic peers that a student outscores. Scores are reported as percentiles (i.e., 1-99). Because SGPs compare growth to peers who participated in the same assessment at the same time, a vertically scaled assessment is not necessarily a requirement.

- Transition tables: Transition tables use growth in discrete "performance levels" (i.e., levels of proficiency, such as basic, proficient, and advanced). These calculations are less precise but can be aggregated across grade levels without using vertically scaled scores.

- Residual models: Residual models are also referred to as "value-added" models. This type of model can describe the effect of outside factors on student growth over time (e.g., teacher or school influence on student learning). Residual models compare the performance of a class, school, or LEA to the average expected change. These models can be aggregated across grades without a vertical scale; however, there are other significant data requirements, such as the ability to link student data to teachers, schools, and LEAs.
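
Four of the models listed above reduce to simple arithmetic, which the following sketch illustrates. These are minimal, hypothetical versions: the function names, the three performance levels, and the sample scores are assumptions, and the residual example takes the "expected" scores as given rather than estimating them from a statistical model.

```python
from typing import Sequence

LEVELS = ["basic", "proficient", "advanced"]  # assumed performance levels


def gain_score(earlier: float, later: float) -> float:
    """Later scale score minus earlier scale score (needs a vertical scale)."""
    return later - earlier


def growth_rate(scores: Sequence[float]) -> float:
    """Slope of a least-squares trend line through equally spaced scores."""
    n = len(scores)
    if n < 2:
        raise ValueError("at least two scores are required")
    mean_x = (n - 1) / 2
    mean_y = sum(scores) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(scores))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def transition(earlier_level: str, later_level: str) -> int:
    """Number of performance levels gained (negative if the student dropped)."""
    return LEVELS.index(later_level) - LEVELS.index(earlier_level)


def mean_residual(observed: Sequence[float], expected: Sequence[float]) -> float:
    """Average of observed minus expected scores for a class or school."""
    if len(observed) != len(expected) or not observed:
        raise ValueError("observed and expected must be non-empty and equal length")
    return sum(o - e for o, e in zip(observed, expected)) / len(observed)
```

For instance, a student scoring 400, 420, and 440 in three consecutive years has a growth rate of 20 scale-score points per year under this sketch.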

Some education policy groups have cautioned against using a type of growth model that measures "growth to proficiency." The "growth to proficiency" model measures whether students are on-track to meet a proficient or higher performance standard (e.g., proficient or advanced). Using this type of model, there would be no difference between a student who moved from "not proficient" to "proficient" and a student who moved from "not proficient" to "advanced." This type of growth model would create the same incentive to focus instruction on students near the proficiency score. Students far below or above the level of proficiency may not receive appropriate attention. If states choose a "growth to proficiency" model, however, there may be ways to weight certain types of growth. Similar to a performance index, growth from "not proficient" to "proficient" could be assigned a point value and other types of growth could be assigned lower or higher points, depending on the design of the system.

By measuring student growth, accountability systems are better suited to evaluate student learning over time. Measuring the amount of learning within a given school year may be a more valid measure of school accountability than proficiency, because a student's prior level of achievement can be taken into account. Under some growth models, schools would not be penalized for certain low-achieving students, provided that the students are meeting growth targets set by the accountability system. This type of growth model may be a more valid measure of factors within the school's control (e.g., classroom practices, teacher, leadership, school climate). Measuring growth targets, however, is dependent on reliable assessments that are sensitive to student learning over time.

Assessments for Students with Special Needs

The ESEA requires that all students participate in annual academic assessments. There are, however, special provisions for how to include students with special needs, including students with disabilities and ELs. For example, a subset of students with disabilities may participate in an alternate assessment, but the use of alternate assessment in state accountability systems is limited. In addition, ELs may be included in state accountability systems in different ways depending on how long they have been in the United States and their level of English language proficiency. This section explores how various assessments for students with special needs are included in state accountability systems.

Students with Disabilities

As was required prior to the enactment of the ESSA, states must include all students with disabilities in the statewide assessment system. Furthermore, states must disaggregate assessment results for students with disabilities. The majority of students with disabilities participate in regular academic assessments with their peers. The ESEA, however, requires states to adhere to several assessment practices to ensure that students with disabilities fully participate in state assessment systems.

First, the ESEA as amended by the ESSA requires that state assessments "be developed, to the extent practicable, using the principles of universal design for learning" (UDL).66 UDL is an inclusive framework that can be used in the development of assessments and instructional materials. In general, UDL is based on three principles: (1) providing multiple means of representation, (2) providing multiple means of action and expression, and (3) providing multiple means of engagement. UDL can be used to reduce unnecessary cognitive burden in the assessment process. For example, UDL helps to limit the use of complex language (e.g., using "primary" vs. "most important," the use of figurative language) and visual clutter (e.g., too much information on a page, unusual font style).67

Second, the ESEA continues to require that states allow "appropriate accommodations" for students with disabilities.68 Accommodations are intended to increase the validity of the assessment of these students.69 For example, if a student has a learning disability in the area of reading, he or she may receive a "read aloud" accommodation on a test of mathematics ability. Because the assessment is measuring mathematics, the student's reading difficulty should not interfere with the measurement of his or her mathematics ability. Without an accommodation, the student's mathematics score may be lower than his or her true ability. If, on the other hand, the student is taking a test of reading comprehension, the "read aloud" accommodation may not be appropriate because it may inaccurately increase the student's reading score.

Third, the ESSA amended the ESEA to allow for the use of an alternate assessment aligned with alternate standards for students with the "most significant cognitive disabilities."70 While the Individuals with Disabilities Education Act (IDEA) includes provisions regarding the identification of students with disabilities,71 it does not mention students with the "most significant cognitive disabilities" as a specific disability category under the law. As such, states must develop guidelines to identify students as those with the "most significant cognitive disabilities." A student's Individualized Education Program team (IEP team)72 applies the state guidelines to determine whether the student will participate in the alternate assessment. Determinations are made on a case-by-case basis.73 If it is determined that a student may participate in an alternate assessment, his or her parents must be informed of the decision and how participation in an alternate assessment may affect the receipt of a regular high school diploma. Students who are found eligible to participate in the alternate assessment are often those identified with intellectual disabilities, autism, or multiple disabilities.74

A state may provide an alternate assessment aligned with alternate achievement standards provided that the total number of students assessed in each content area does not exceed 1% of all tested students in the state. The "1% cap" applies only at the state level; there is no LEA-level cap.75 However, any LEA that assesses more than 1% of the total number of students assessed in a content area using the alternate assessment must submit information to the SEA justifying the need to do so. The state is then required to provide "appropriate oversight, as determined by the State" of any such LEA. If a state exceeds the 1% cap, scores from the assessments over the cap are counted as nonproficient in the state accountability system.76
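
The two checks described above, the state-level cap and the LEA-level justification requirement, can be expressed as a brief sketch. The function names and counts are hypothetical, and integer arithmetic is used so that a share of exactly 1% is not flagged.

```python
from typing import Dict, List, Tuple


def exceeds_one_percent(alternate_tested: int, total_tested: int) -> bool:
    """True if alternate-assessment takers are more than 1% of all tested students."""
    return alternate_tested * 100 > total_tested


def leas_needing_justification(lea_counts: Dict[str, Tuple[int, int]]) -> List[str]:
    """LEAs that must justify their alternate-assessment use to the SEA.

    lea_counts maps an LEA name to (alternate_tested, total_tested) for one
    content area.
    """
    return sorted(
        lea for lea, (alternate, total) in lea_counts.items()
        if exceeds_one_percent(alternate, total)
    )


# Hypothetical counts for one content area.
counts = {"LEA 1": (12, 1000), "LEA 2": (5, 1000), "LEA 3": (10, 1000)}
state_alternate = sum(alternate for alternate, _ in counts.values())
state_total = sum(total for _, total in counts.values())
```

In this example, one LEA is above 1% and would need to submit a justification, but the state as a whole remains under the cap.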

An alternate assessment based on alternate achievement standards is considered a more valid assessment of students with the most significant cognitive disabilities because the alternate achievement standards are better aligned with the academic content standards for students in this group. If students with the most significant cognitive disabilities participate in the general assessment, they may be assessed on the full range of grade-level standards, which may not reflect the content they are learning.

While the alternate assessment may be a more valid assessment of students with the most significant cognitive disabilities, there may be specific issues of reliability to consider. For example, many alternate assessments are given in a different format, such as portfolio assessments, rating scales, or item-based tests.77 These test formats are often scored against a rubric that reflects how well a student mastered the alternate achievement standard. Using this type of assessment scoring, issues of inter-scorer agreement may be particularly important to preserving the validity of the accountability system.78 If different scorers do not rate student performance consistently and with reliability, the validity of the achievement score may be questionable and an incorrect number of students with the most significant cognitive disabilities may be counted as "proficient" in the accountability system.
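
Inter-scorer agreement can be quantified in several ways. The sketch below shows two common statistics, simple percent agreement and Cohen's kappa (which adjusts for agreement expected by chance). Neither statistic is prescribed by the ESEA; they are offered only as an illustration, and the rubric labels and ratings are hypothetical.

```python
from collections import Counter
from typing import Hashable, Sequence


def percent_agreement(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Share of student work samples that both scorers rated identically."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Agreement between two scorers, corrected for chance agreement."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both scorers pick the same category
    # if each rated independently at their own observed rates.
    expected = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both scorers used one identical category throughout
    return (observed - expected) / (1 - expected)


# Hypothetical rubric ratings: "P" = proficient, "N" = not proficient.
scorer_1 = ["P", "P", "N", "N"]
scorer_2 = ["P", "N", "N", "N"]
```

Here the scorers agree on three of four portfolios, but the chance-corrected statistic is lower than the raw agreement rate, which is why raw agreement alone can overstate scoring reliability.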

English Learners

States must include all ELs in the statewide assessment system and disaggregate results for these students. ELs participate in statewide assessment and accountability systems in different ways, depending on their level of language proficiency and number of years of schooling in the United States. There are two separate types of assessments for ELs: (1) assessments of English language proficiency (ELP) and (2) statewide assessments of reading, mathematics, and science that are required for all students.

As was required prior to the enactment of the ESSA, states must ensure that all LEAs provide an annual assessment of English language proficiency of all ELs. The assessment must be aligned to state English proficiency standards within the domains of speaking, listening, reading, and writing.79 For ELP assessment purposes, most states currently participate in the WIDA consortium,80 which serves linguistically diverse students. The consortium provides for the development and administration of ACCESS 2.0, which is currently the most commonly used test of English language proficiency.81 In 2017, the WIDA consortium changed the achievement standards for English language proficiency to reflect the language demands of college- and career-readiness standards. As a result, some states are experiencing declines in reported English language proficiency levels and have fewer students exiting the school support programs for ELs.82 This means that more EL students may be included in accountability determinations for the EL subgroup for longer periods of time.

As was also required prior to the enactment of the ESSA, states must generally provide for the inclusion of ELs in statewide assessments of reading, mathematics, and science.83 Similar to the provision for students with disabilities, the ESEA provides for "appropriate accommodations" for ELs and allows states to administer, "to the extent practicable," assessments in the language that is most likely to yield accurate results.

The ESEA continues to require a state to assess an EL in English once the student has attended school in the United States for three or more consecutive years. An LEA, however, may decide on a case-by-case basis to assess an EL in a different language for an additional two consecutive years if the student has not reached a level of English language proficiency that allows for participation in an English language assessment of reading.84 The assessment of an EL in a different language may yield more accurate results for some students.

As permitted prior to the enactment of the ESSA, a state may exclude an EL from one administration of the reading assessment if he or she has been enrolled in school in the United States for less than 12 months.85 The ESSA added a second option regarding the assessment of recently arrived EL students: a state may assess and report the performance of such a recently arrived EL on the statewide reading and mathematics assessments, but exclude that student's results for the purposes of the state's accountability system. If a state selects the latter option, it is required to include a measure of student growth on these assessments in the student's second year of enrollment and a measure of proficiency starting with the third year of enrollment for the purposes of accountability.86

The results of statewide academic assessments must be disaggregated for ELs. A state may now include the scores of formerly identified ELs in the EL subgroup for a period of four years after the student ceases to be identified as an EL. That is, once an EL becomes proficient in English, his or her score may still be included in the "EL subgroup" for reading and mathematics for four years.87 Prior to the enactment of the ESSA, an EL who had attained proficiency in English had his or her score included in the EL subgroup for two years.88

Because the English language proficiency standards for many states' tests have changed, it may take longer for ELs to exit the subgroup. This change, combined with a state's ability to include the performance of former ELs in the EL subgroup for four years, means that many ELs may remain in the subgroup for accountability purposes for a longer time. Because students presumably attain higher levels of English language proficiency as time goes on, retaining them in the subgroup longer may raise the subgroup's measured performance. Because the ESSA has changed the population of students included in the subgroup, there may also be inconsistency in the performance of the EL subgroup across time as states transition from NCLB accountability systems to ESSA accountability systems.

Computer Adaptive Assessment

The ESEA as amended by the ESSA explicitly authorizes the use of computer adaptive assessments to meet the requirements under Title I-A.89 Prior to the enactment of the ESSA, it was unclear whether computer adaptive assessments were allowed due to the requirement that statewide assessments be "the same academic assessments used to measure the achievement of all public elementary school and secondary school students in the State."90 One property of computer adaptive assessments is that they adjust to a student's individual ability, which means that all students do not receive the same assessment items. The ESSA, therefore, added clarifying language that specifies that the requirement that all assessments be "the same" "shall not be interpreted to require that all students taking the computer adaptive assessment be administered the same assessment items."91

A computer adaptive assessment works by adjusting to a student's individual responses. If a student continues to answer test items correctly, the assessment administers more difficult items. The items will continue to get more difficult until the student reaches a "ceiling." A ceiling in assessment is reached when a student either (1) completes all of the most difficult assessment items (i.e., the ceiling of the assessment itself) or (2) answers a number of assessment items incorrectly (i.e., the ceiling of the student's ability). Because the assessment items are adaptive, a computer adaptive assessment can measure a student's ability above or below grade level.
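The adaptive procedure and the two kinds of ceiling described above can be sketched in Python. This is a simplified illustration under stated assumptions, not any state's or vendor's actual algorithm: the function name `run_adaptive_test`, the one-step difficulty ladder, the choice to step down one level after an incorrect answer, and the `max_misses` threshold are all hypothetical. Answering one item at the top level stands in for completing all of the most difficult items.

```python
from typing import Callable, List, Tuple


def run_adaptive_test(
    answers_correctly: Callable[[int], bool],
    num_levels: int,
    start_level: int = 0,
    max_misses: int = 3,
) -> Tuple[List[Tuple[int, bool]], str]:
    """Administer items from a difficulty ladder until a ceiling is reached.

    answers_correctly(level) is a hypothetical stand-in for presenting one
    item at the given difficulty level and scoring the response.
    Returns the (level, correct) history and which ceiling ended the test.
    """
    level = start_level
    misses = 0
    history: List[Tuple[int, bool]] = []
    while True:
        correct = answers_correctly(level)
        history.append((level, correct))
        if correct:
            if level == num_levels - 1:
                # Ceiling of the assessment itself: no harder items remain.
                return history, "test ceiling"
            level += 1  # correct answer: administer a more difficult item
        else:
            misses += 1
            if misses >= max_misses:
                # Ceiling of the student's ability (assumed stopping rule).
                return history, "student ceiling"
            level = max(level - 1, 0)  # assumed: step down after a miss


# Hypothetical student who can answer items below difficulty level 3.
history, reason = run_adaptive_test(lambda level: level < 3, num_levels=10)
```

In this example the student climbs to level 3, misses it three times, and the test stops at the student's ceiling. A student who answers every item correctly would instead stop at the ceiling of the test.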

The ESEA requires that computer adaptive assessments measure, at a minimum, a student's academic proficiency based on state academic standards for the grade level of the student. After measuring a student's grade level proficiency, the assessment may also measure proficiency above and below the student's actual grade level. Computer adaptive assessments are also required to measure student growth. The ESEA authorizes their use for alternate assessments for students with the most significant cognitive disabilities and English language proficiency assessments for ELs.

Due to the adaptive nature of these assessments, there have been several areas of concern about using them for statewide academic assessments. First, because computer adaptive assessments generally require students to reach a ceiling, it is possible that the administration time may be longer than that of a traditional assessment for high-achieving students.92 However, some states that use computer adaptive assessments find that they are more time efficient than traditional tests.93

Another concern is the accurate measurement of special populations, such as students with disabilities and ELs. Special populations are more likely to have inconsistent knowledge within a content area. That is, they may not have mastered all the lower-level skills in a subject area, though they may have partial mastery of higher-level skills. If a student reaches the ceiling on the lower-level skills, he or she would not have the opportunity to demonstrate partial mastery of the higher-level skills.94

Some have also expressed concern that computer adaptive assessments may test a student's computer literacy skills instead of the content areas of reading and mathematics.95 If a student's computer literacy interferes with the measurement of reading and mathematics proficiency, the assessment result would not be a valid representation of what the student knows and can do.96

Other issues that have been raised related to computer adaptive assessments (as well as nonadaptive computer assessments) focus on technical, financial, and reporting issues. For example, a school using computer adaptive assessments has to have computers available to students, may have to purchase software, needs to have technical staff available to set up the assessments and troubleshoot problems that may arise during the assessment process, and may need to provide training to teachers on the use of these assessments.97 At the same time, depending on the nature of the test items, computer assessments can generally be scored more quickly than paper-and-pencil assessments, providing teachers with more immediate feedback on student performance.98

Student Opt-Out Practices

A key provision of statewide assessment systems is the requirement to assess all students. This requirement is enforced through the reporting of student assessment results in the accountability system. States are required to annually measure the achievement of at least 95% of all students and 95% of all students in each subgroup.99 When at least 95% of assessment results are reported within the accountability system, the conclusions based on these assessment results are more likely to be valid and reliable for differentiating schools based on academic achievement.
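The 95% requirement amounts to a rate check applied to all students and to each subgroup. A minimal sketch follows, assuming participation is computed simply as students assessed divided by students enrolled. The function name `participation_report` and the counts are hypothetical, and the sketch does not capture how states define the denominator in practice.

```python
from typing import Dict, Tuple

PARTICIPATION_THRESHOLD = 0.95  # the 95% requirement described above


def participation_report(
    counts: Dict[str, Tuple[int, int]],
) -> Dict[str, Tuple[float, bool]]:
    """Map each group to (participation rate, meets the 95% threshold).

    counts maps a group name to (students assessed, students enrolled).
    """
    report = {}
    for group, (assessed, enrolled) in counts.items():
        if enrolled <= 0:
            raise ValueError(f"{group}: enrolled must be positive")
        rate = assessed / enrolled
        report[group] = (rate, rate >= PARTICIPATION_THRESHOLD)
    return report


# Hypothetical counts for one school (illustrative only).
example = participation_report({
    "all students": (480, 500),  # 96% assessed
    "ELs": (36, 40),             # 90% assessed
})
```

With these illustrative counts, the school meets the threshold for all students but not for the EL subgroup, which shows why the requirement is applied to each subgroup separately.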

A school alone cannot decide whether students participate in academic assessments, as parents have rights concerning the participation of their children. There are two separate provisions that were enacted through the ESSA regarding parental rights in the assessment process. First, at the parent's request, the ESEA now requires the LEA to provide information on student participation in assessments and to include any policy, procedure, or parental right to opt the child out of the assessment.100 Second, the ESEA now explicitly states that nothing in the assessment requirements "shall be construed as preempting a State or local law regarding the decision of a parent not to have the parent's child participate in the academic assessments."101 Thus, if a state or local law regarding opt-outs exists, the assessment requirements of the ESEA as amended by the ESSA cannot preempt it.102

Although excessive numbers of opt-outs may have consequences for accountability, the primary focus of this discussion is the consequences for assessment itself. Excessive numbers of opt-outs may undermine the validity of the measurement of student achievement and, by extension, may undermine the validity of the state accountability system. Validity may be undermined because a large number of opt-outs could create a scenario in which states are measuring student achievement that is not representative of the whole student population.

To explore this issue, it is important to understand a few basic statistical terms. In statewide assessment systems under the ESEA, states are required to assess the universe of students—that is, all students. In education research, it is more common to assess a representative sample of students. A representative sample of students is carefully selected from the universe of students, and the sample reflects the whole population in terms of certain demographic characteristics (e.g., gender, race/ethnicity, socioeconomic status, disability status, EL status).103 In a representative sample, students with desired demographic characteristics are randomly selected in specific proportions to represent the entire population.

In statewide assessment systems under the ESEA, states are not permitted to select a representative sample of students. Because the ESEA requires that states assess the universe of students, large numbers of students opting out of the assessment may leave the state with an unrepresentative sample. Students whose parents opt them out are likely not randomly distributed across demographic characteristics, so the students who are assessed may no longer reflect the population as a whole. If a state assesses an unrepresentative sample of students, the assessment results used in its accountability system would not accurately reflect the true achievement of the population as a whole (i.e., the universe).
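A small numerical sketch can show how nonrandom opt-outs distort an average. All figures below are hypothetical and chosen only for illustration, as is the function name `mean_score`. The example assumes that the higher-scoring group opts out at a higher rate; the direction of the distortion would reverse if the lower-scoring group opted out more often.

```python
from typing import List, Tuple


def mean_score(
    groups: List[Tuple[int, float, float]], apply_opt_out: bool
) -> float:
    """Weighted mean score across groups.

    Each group is (enrollment, mean score, opt-out rate). With
    apply_opt_out=True, each group's weight is reduced to the number of
    students who actually take the assessment.
    """
    total_weight = 0.0
    total_score = 0.0
    for enrollment, avg, opt_out_rate in groups:
        if apply_opt_out:
            weight = enrollment * (1.0 - opt_out_rate)
        else:
            weight = float(enrollment)
        total_weight += weight
        total_score += weight * avg
    return total_score / total_weight


# Hypothetical groups: (enrollment, mean scale score, opt-out rate).
groups = [
    (600, 250.0, 0.02),
    (400, 300.0, 0.30),
]
true_mean = mean_score(groups, apply_opt_out=False)     # all enrolled students
observed_mean = mean_score(groups, apply_opt_out=True)  # tested students only
```

Under these assumptions the observed mean among tested students falls below the mean for all enrolled students, even though no individual student's achievement has changed.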

Testing Burden

Both prior to and following the enactment of the ESSA, the ESEA has required states to administer 17 annual assessments across three subject areas (reading, mathematics, and science). These requirements have been implemented within a crowded landscape of state, local, and classroom uses of educational assessments. The emphasis on educational assessment within federal education policies, which has coincided with expanded assessment use in many states and localities, has led to considerable debate about the amount of time being spent taking tests and preparing for tests in schools.104
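The count of 17 follows from the grade-level requirements summarized earlier in this report: annual reading and mathematics assessments in grades 3 through 8 plus one in high school, and one science assessment in each of three grade spans. The arithmetic can be checked with a short sketch; the variable names are illustrative.

```python
# Reading and mathematics: tested annually in grades 3 through 8,
# plus one administration in high school.
annual_grades = list(range(3, 9))      # grades 3, 4, 5, 6, 7, 8
per_subject = len(annual_grades) + 1   # six grades plus high school

# Science: at least once in each of three grade spans.
science = 3

# Two subjects tested per_subject times each, plus science.
total = 2 * per_subject + science
```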

One common criticism of test-based accountability is that it leads to a narrowing of the curriculum. There are several ways this could occur. First, the time spent administering the actual assessments, sometimes called the "testing burden," could take away from instructional time. Second, test-based accountability may lead to increases in test preparation in the classroom, which also takes away from instructional time. There is some evidence to suggest that teachers feel pressure to "teach to the test" and engage in test preparation activities at the expense of broader, higher-level instruction. Test-preparation activities take several forms, including emphasizing specific content believed to be on the assessments, changing classroom assignments to look like the format of the assessments, or presenting test-taking strategies.105 Third, in test-based accountability systems, teachers report reallocating instructional time toward tested subjects and away from nontested subjects. Surveys of teachers have consistently reported that their instruction emphasizes reading and mathematics over other subjects like history, foreign language, and the arts.106

Test preparation can take many forms, and it is difficult to distinguish appropriate test preparation from inappropriate test preparation. Many schools provide test preparation to young students who have little experience with standardized testing, and this form of preparation can actually increase the validity of a test score because it is less likely that students will do poorly due to unfamiliarity with the testing process. Test preparation begins to affect validity in a negative way, however, when there are excessive amounts of alignment between test items and curricula, excessive coaching on a particular type of item that will appear on the test, or even outright cheating.

Although these efforts are often undertaken with good intentions, overuse of test preparation strategies can lead to score inflation.107 Score inflation is a phenomenon in which scores on high-stakes assessments tend to increase at a faster rate than scores on low-stakes assessments. The validity of the assessment results is reduced when score inflation is present. Studying its prevalence is difficult because LEAs may be reluctant to give researchers access to test scores for the purpose of investigating possible inflation. Several studies, however, have been able to document the problem of score inflation by comparing gains on state assessments (historically, high-stakes) to those made on NAEP108 (low-stakes).109 Studies have consistently reported discrepancies in the overall level of student achievement, the size of student achievement gains, and the size of the achievement gap. These discrepancies indicate that student scores on state assessments may be inflated and that these inflated scores may not represent true achievement gains as measured by another test of a similar construct. In this case, the validity of the conclusions based on state assessments may be questioned.110

The ESSA added new provisions that focus on testing burden and possibly reducing the number of assessments overall (not just those required by Title I-A). Each state may set a target limit on the aggregate amount of time used for the administration of assessments for each grade that is expressed as a percentage of annual instructional hours. For each grade it serves, an LEA must provide information on (1) the subject matter being assessed; (2) the purpose for which the assessment is designed and used; (3) the source of the requirement of the assessment; and (4) the amount of time students will spend taking assessments, the schedule of assessments, and the format for reporting results of assessments (if such information is available).111 In addition, the Secretary may reserve funds under the State Assessment Grant program to provide grants to states for conducting audit assessments that examine whether all of the tests being used in a state are needed, and to provide subgrants to LEAs to conduct a similar examination of assessments used at the LEA level.

Acknowledgments

Erin Lomax, former CRS analyst and current independent contractor to CRS, co-authored this report.

Footnotes

1. While the reading and mathematics assessments are required to be used in state accountability systems, states can choose to include science assessments in the state accountability system. For more information about state accountability systems, see CRS In Focus IF10556, Elementary and Secondary Education Act: Overview of Title I-A Academic Accountability Provisions, by Rebecca R. Skinner.

2. Prior to the enactment of the ESSA, the assessment requirements for students with disabilities who were not taking the assessments provided for all other students were specified only in regulation (34 C.F.R. 200.6).

3. Competency-based assessments are used as a tool to enable students to demonstrate mastery of academic content, regardless of time, place, or pace of learning. For more information, see U.S. Department of Education, Competency-Based Learning or Personalized Learning, https://www.ed.gov/oii-news/competency-based-learning-or-personalized-learning.

4. In addition to the 17 state assessments, in order to receive a grant under Title I-A, each state must also include assurances that the state will participate in biennial 4th and 8th grade reading and mathematics assessments under the National Assessment of Educational Progress (NAEP) (Section 1111(c)(2)).

5. For a discussion of summative assessments, see CRS Report R45048, Basic Concepts and Technical Considerations in Educational Assessment: A Primer, by Rebecca R. Skinner.

| 6. |